Ten! Alamy/Insidefoto

Humans are finite creatures. Our brains have a finite number of neurons and we interact with a finite number of people during our finite lifetime. Yet humans have the remarkable ability to conceive of the infinite.

This ability underlies Euclid’s proof that there are infinitely many prime numbers, as well as the belief of billions that their gods are infinite beings, free of mortal constraints.

These ideas will be well known to Pope Leo XIV since, before his life in the church, he trained as a mathematician. Leo’s trajectory is probably no coincidence, since there is a connection between mathematics and theology.

Infinity is undoubtedly of central importance to both. Virtually all mathematical objects, such as numbers or geometric shapes, form infinite collections. And theologians frequently describe God as a unique, absolutely infinite being.

Despite using the same word, though, there has traditionally been a vast gap between how mathematicians and theologians conceptualise infinity. From antiquity until the 19th century, mathematicians believed that there are infinitely many numbers, but – in contrast to theologians – firmly rejected the idea of the absolute infinite.

The idea is roughly this: surely, there are infinitely many numbers, since we can always keep counting. But each number itself is finite – there are no infinite numbers. What is rejected is the legitimacy of the collection of all numbers as a closed object in its own right, because the existence of such a collection leads to logical paradoxes.

A paradox of the infinite

The simplest example is a version of Galileo’s paradox, which leads to seemingly contradictory statements about the natural numbers 1, 2, 3, …

First, observe that some numbers are even, while others are not. Hence, the numbers – even and odd – must be more numerous than just the even numbers 2, 4, 6, … And yet, every number can be paired with exactly one even number, and every even number with exactly one number. To see this, simply multiply any given number by 2.

But then there cannot be more numbers than there are even numbers. We thus arrive at the contradictory conclusion that numbers are more numerous than the even numbers, while at the same time there are not more numbers than there are even numbers.
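The pairing behind the paradox can be made concrete on a finite stretch of the number line. The Python sketch below is illustrative only: the function name `pair_with_evens` and the cutoff `limit` are assumptions introduced here, not part of the original argument.

```python
def pair_with_evens(limit):
    """Pair each natural number 1..limit with its double."""
    return {n: 2 * n for n in range(1, limit + 1)}


limit = 10  # hypothetical cutoff; any positive whole number works
pairs = pair_with_evens(limit)

# Every number gets its own even partner: no two numbers share one.
assert len(set(pairs.values())) == len(pairs)

# Every even number up to 2 * limit is used, so none is left unpaired.
assert sorted(pairs.values()) == list(range(2, 2 * limit + 1, 2))

# Yet the evens are only part of the numbers: 1, 3, 5, ... are not even.
odds = [n for n in pairs if n % 2 == 1]
print(pairs)
print(odds)
```

Both checks keep passing however large `limit` is chosen, which is why the two observations collide once the collection of all numbers is treated as a single completed object.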

Because of such paradoxes, mathematicians rejected actual infinities for millennia. As a result, mathematics was concerned with a much tamer concept of infinity than the absolute one used by theologians. This situation dramatically changed with mathematician Georg Cantor’s introduction of transfinite set theory in the second half of the 19th century.

Georg Cantor, mathematical rebel. Wikipedia

Cantor’s radical idea was to introduce, in a mathematically rigorous way, absolute infinities to the realm of mathematics. This innovation revolutionised the field by delivering a powerful and unifying theory of the infinite. Today, set theory provides the foundations of mathematics, upon which all other subdisciplines are built.

According to Cantor’s theory, two sets – A and B – have the same size if their elements stand in a one-to-one correspondence. This means that each element of A can be related to a unique element of B, and vice versa.

Think of sets of husbands and wives respectively, in a heterosexual, monogamous society. These sets can be seen to have the same size, even though we might not be able to count each husband and wife.

The reason is that the relation of marriage is one-to-one. For each husband there is a unique wife, and conversely, for each wife there is a unique husband.

Using the same idea, we have seen above that in Cantor’s theory, the set of numbers – even and odd – has the same size as the set of even numbers. And so does the set of integers, which includes negative numbers, and the set of rational numbers, which can be written as fractions.
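One way to picture these correspondences is to list the integers and the positive fractions one after another, so that each receives a position 1, 2, 3, and so on. The sketch below is an illustration under stated assumptions rather than Cantor’s own construction: the names `nth_integer` and `positive_rationals` are hypothetical, and the zigzag and sum-based orderings are just two convenient choices among many.

```python
from fractions import Fraction
from itertools import islice
from math import gcd


def nth_integer(n):
    """Map position n = 1, 2, 3, ... to 0, 1, -1, 2, -2, ..."""
    return n // 2 if n % 2 == 0 else -(n // 2)


def positive_rationals():
    """Yield every positive fraction exactly once, in lowest terms.

    Fractions are grouped by numerator + denominator, so each one
    is reached after finitely many steps.
    """
    total = 2
    while True:
        for numerator in range(1, total):
            denominator = total - numerator
            if gcd(numerator, denominator) == 1:  # skip repeats such as 2/2
                yield Fraction(numerator, denominator)
        total += 1


print([nth_integer(n) for n in range(1, 8)])
print([str(q) for q in islice(positive_rationals(), 8)])
```

The generator covers only the positive fractions; zero and the negative fractions could be interleaved using the same zigzag idea as `nth_integer`.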

The most striking feature of Cantor’s theory is that not all infinite sets have the same size. In particular, Cantor showed that the set of real numbers, which can be written as infinite decimals, must be strictly larger than the set of integers.
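The article does not spell out how Cantor showed this. A standard route is his diagonal argument: given any list of infinite decimals, build a new decimal that differs from the k-th entry in its k-th digit, so it cannot appear anywhere on the list. The sketch below applies that single step to a short, made-up sample; the function name `diagonal_escape` and the digit strings are assumptions for illustration only.

```python
def diagonal_escape(rows):
    """Return digits that differ from rows[k] at position k, for every k.

    Each row holds the digits after the decimal point of one number.
    Using only 4 and 5 avoids ambiguous decimals such as 0.0999... = 0.1000...
    """
    digits = []
    for k, row in enumerate(rows):
        digits.append("4" if row[k] == "5" else "5")
    return "".join(digits)


# Hypothetical sample: five numbers, each cut off after five digits.
listed = ["14159", "71828", "41421", "57721", "55555"]
escaped = diagonal_escape(listed)
print("0." + escaped)
```

A finite sample can only hint at the argument. The point is that the same recipe works for any proposed list of real numbers, however it is arranged, so no list indexed by 1, 2, 3, … can contain them all.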

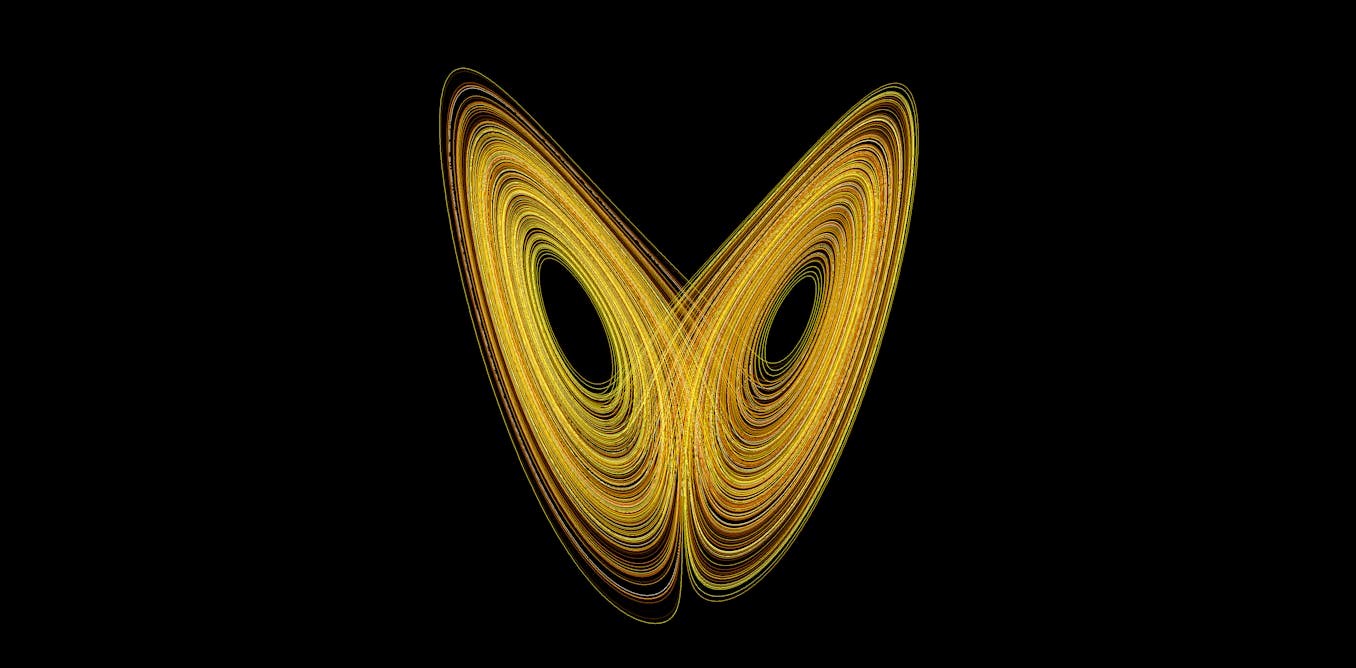

The set of real numbers, in turn, is smaller than other, even larger infinite sets, and so on. To measure the size of infinite sets, Cantor introduced so-called transfinite numbers.

The ever-increasing series of transfinite numbers is denoted by Aleph, the first letter of the Hebrew alphabet, whose mystic nature has been explored by philosophers, theologians and poets alike.

Set theory and Pope Leo XIII

For Cantor, a devout Lutheran Christian, the motivation and justification of his theory of absolute infinities were directly inspired by religion. In fact, he was convinced that the transfinite numbers were communicated to him by God. Moreover, Cantor was deeply concerned about the consequences of his theory for Catholic theology.

Pope Leo XIII, Cantor’s contemporary, encouraged theologians to engage with modern science, to show that the conclusions of science were compatible with religious doctrine. In his extensive correspondence with Catholic theologians, Cantor went to great lengths to argue that his theory does not challenge the status of God as the unique actual infinite being.

On the contrary, he understood his transfinite numbers as increasing the extent of God’s nature, as a “pathway to the throne of God”. Cantor even addressed a letter and several notes on this topic to Leo XIII himself.

Pope Leo XIII. Wikipedia/Braun et Compagnie

For Cantor, absolute infinities lie at the intersection of mathematics and theology. It is striking to consider that one of the most fundamental revolutions in the history of mathematics, the introduction of absolute infinities, was so deeply entangled with religious concerns.

Pope Leo XIV has been explicit that Leo XIII was the inspiration for his choice of pontifical name. Perhaps among an infinite number of potential reasons for the choice, this mathematical link was one.


*Credit for article given to Balthasar Grabmayr*