Both arithmetic aficionados and the mathematically challenged will be equally captivated by new research that upends hundreds of years of popular belief about prime numbers.

Contrary to what just about every mathematician on Earth will tell you, prime numbers can be predicted, according to researchers at City University of Hong Kong (CityUHK) and North Carolina State University, U.S.

The research team comprises Han-Lin Li, Shu-Cherng Fang, and Way Kuo. Fang is the Walter Clark Chair Professor of Industrial and Systems Engineering at North Carolina State University. Kuo is a Senior Fellow at the Hong Kong Institute for Advanced Study, CityUHK.

“This is a genuinely revolutionary development in prime number theory,” says Way Kuo, who is working on the project alongside researchers from the U.S. The team leader is Han-Lin Li, a Visiting Professor in the Department of Computer Science at CityUHK.

We have known for millennia that there are infinitely many prime numbers, such as 2, 3, 5, 7 and 11, which can be divided evenly only by 1 and by themselves. But until now, we have not been able to predict where the next prime will pop up in a sequence of numbers. In fact, mathematicians have generally agreed that prime numbers are like weeds: they seem to shoot up at random.

“But our team has devised a way to predict accurately and swiftly when prime numbers will appear,” adds Kuo.

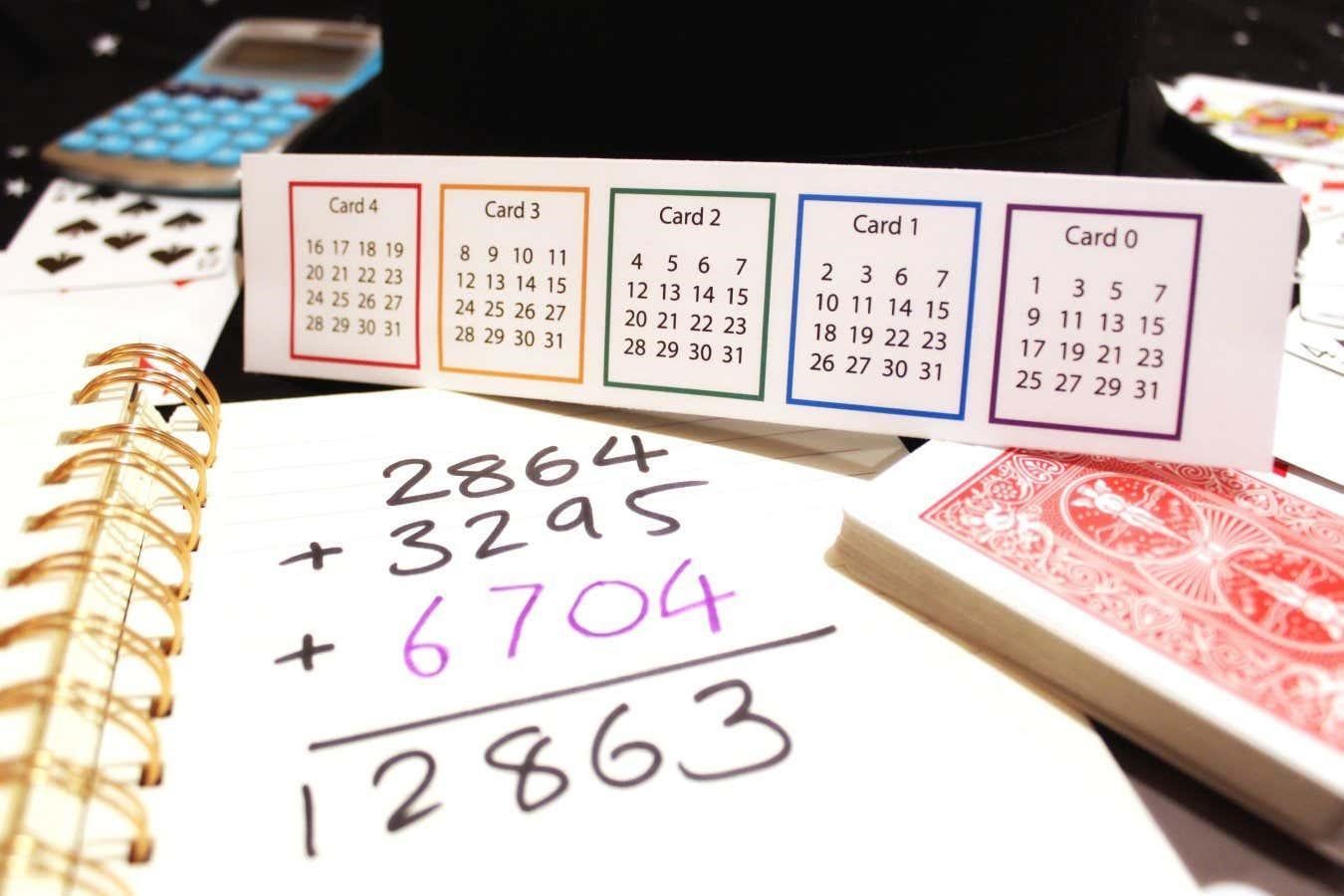

The technical aspects of the research are daunting for all but a handful of mathematicians worldwide. In a nutshell, the team's research has produced a handy periodic table of primes (PTP), which points to the locations of prime numbers. The research is available as a working paper in the SSRN Electronic Journal.

The PTP can be used to help find future primes, factor integers, visualize an integer and its factors, identify the locations of twin primes, predict the total number of primes and twin primes in an interval, and estimate the maximum prime gap within an interval, among other applications.
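For readers unfamiliar with terms such as twin primes and prime gaps, here is a minimal Python sketch that computes them for an interval using the classical Sieve of Eratosthenes. It is not the researchers' PTP, whose method is not reproduced in this article; the function names `primes_up_to` and `interval_stats` are hypothetical, chosen only for illustration.

```python
import math


def primes_up_to(n):
    """Classical Sieve of Eratosthenes (not the PTP): return all primes <= n."""
    if n < 2:
        return []
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    for p in range(2, math.isqrt(n) + 1):
        if is_prime[p]:
            # Mark every multiple of p from p*p upward as composite.
            is_prime[p * p :: p] = bytearray(len(range(p * p, n + 1, p)))
    return [i for i, flag in enumerate(is_prime) if flag]


def interval_stats(lo, hi):
    """Hypothetical helper: count primes in [lo, hi], list twin-prime pairs,
    and find the largest gap between consecutive primes in that interval."""
    primes = [p for p in primes_up_to(hi) if p >= lo]
    pairs = list(zip(primes, primes[1:]))
    twins = [(p, q) for p, q in pairs if q - p == 2]
    max_gap = max((q - p for p, q in pairs), default=0)
    return len(primes), twins, max_gap


if __name__ == "__main__":
    count, twins, gap = interval_stats(1, 100)
    print(count, len(twins), gap)
```

Between 1 and 100 there are 25 primes and 8 twin-prime pairs, and the largest gap is 8 (from 89 to 97). A sieve like this has to check every number up to the end of the interval; the researchers' claim is that the PTP locates primes without that kind of exhaustive search.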

More to the point, the PTP has major applications today in areas such as cybersecurity. “Primes are already a fundamental part of encryption and cryptography, so this breakthrough means data can be made much more secure if we can predict prime numbers,” Kuo explains.

This advance in prime number research grew out of the team's work on systems reliability design and on a color-coding system that uses prime numbers to enable efficient encoding and more effective color compression. During that work, the team discovered that their calculations could be used to predict prime numbers.


Article credit: Michael Gibb, City University of Hong Kong