There is more to where maths came from than the ancient Greeks. From calculus to the theorem we credit to Pythagoras, so much of our knowledge comes from other places, including ancient China, India and the Arabian peninsula, says Kate Kitagawa.

The history of mathematics has an image problem. It is often presented as a meeting of minds among ancient Greeks who became masters of logic. Pythagoras, Euclid and their pals honed the tools for proving theorems and that led them to the biggest results of ancient times. Eventually, other European greats like Leonhard Euler and Isaac Newton came along and made maths modern, which is how we got to where we are today.

But, of course, this telling is greatly distorted. The history of maths is far richer, more chaotic and more diverse than it is given credit for. So much of what is now incorporated into our global knowledge comes from other places, including ancient China, India and the Arabian peninsula.

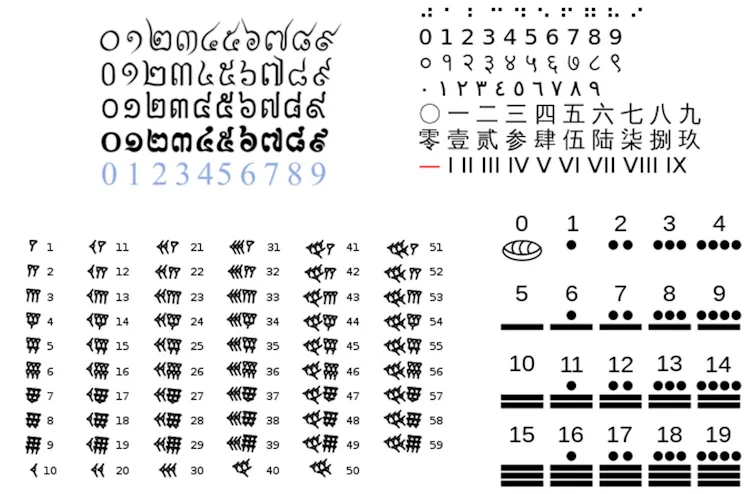

Take “Pythagoras’s” theorem. This is the one that says that in a right-angled triangle, the square of the longest side equals the sum of the squares of the other two sides. The ancient Greeks certainly knew about this theorem, but so too did mathematicians in ancient Babylonia, Egypt, India and China.
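In symbols, writing a and b for the two shorter sides and c for the longest side (letters chosen here purely for illustration), the theorem reads as below. The familiar 3-4-5 triangle gives a quick numerical check:

```latex
a^2 + b^2 = c^2
\qquad \text{e.g.} \qquad
3^2 + 4^2 = 9 + 16 = 25 = 5^2
```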

In fact, in the 3rd century AD, Chinese mathematician Liu Hui added a proof of the theorem to the already old and influential book The Nine Chapters on the Mathematical Art. His version includes the earliest written statement of the theorem that we know of. So perhaps we should really call it Liu’s theorem, or the gougu theorem, as it was known in China.

The history of maths is filled with tales like this. Ideas have sprung up in multiple places at multiple times, leaving room for interpretation as to who should get the credit. As if credit were something that couldn’t be shared.

As a researcher on the history of maths, I had come across examples of distorted views, but it was only when working on a new book, The Secret Lives of Numbers, that I found out just how pervasive they are. My co-author, New Scientist’s Timothy Revell, and I found that the further we dug, the more of the true history of maths there was to uncover.

Another example is the origin of calculus. This is often presented as a battle between Newton and Gottfried Wilhelm Leibniz, two great 17th-century European mathematicians. They both independently developed extensive theories of calculus, but missing from the story is how an incredible school in Kerala, India, led by the mathematician Mādhava, hit upon some of the same ideas 300 years earlier.

The idea that the European way of doing things is superior didn’t originate in maths – it came from centuries of Western imperialism – but it has infiltrated it. Maths outside ancient Greece has often been put to one side as “ethnomathematics”, as if it were a side story to the real history.

In some cases, history has also distorted legacies. Sophie Kowalevski, who was born in Moscow in 1850, is now a relatively well-known figure. She was a fantastic mathematician, known for tackling a problem she dubbed a “mathematical mermaid” for its allure. The challenge was to describe mathematically how a spinning top moves, and she made breakthroughs where others had faltered.

During her life, she was constantly discouraged from pursuing maths and often had to work for free, collecting tuition money from her students in order to survive. After her death, biographers tarnished her legacy, painting her as a femme fatale who relied on her looks and implying that she had effectively passed off others’ work as her own. There is next to no evidence this is true.

Thankfully, historians of mathematics are re-examining and correcting the biases and stereotypes that have plagued the field. This is an ongoing process, but by embracing its diverse and chaotic roots, the next chapters for maths could be the best yet.

*Credit for article given to Kate Kitagawa*