The springtime emergence of vast swarms of cicadas can be explained by a mathematical model of collective decision-making that has similarities to models describing stock market crashes.

Pick almost any location in the eastern United States, say Columbus, Ohio. Every 13 or 17 years, as the soil warms in springtime, vast swarms of cicadas emerge from their underground burrows, sing their deafening song, take flight and mate, producing offspring for the next cycle.

This noisy phenomenon repeats all over the eastern and southeastern U.S. as 17 distinct broods emerge in staggered years. In spring 2024, billions of cicadas are expected as two different broods—one that appears every 13 years and another that appears every 17 years—emerge simultaneously.

Previous research has suggested that cicadas emerge once the soil temperature reaches 18°C, but even within a small geographical area, differences in sun exposure, foliage cover or humidity can lead to variations in temperature.

Now, in a paper published in the journal Physical Review E, researchers from the University of Cambridge have proposed a mechanism by which such synchronous cicada swarms can emerge despite these temperature differences.

The researchers developed a mathematical model for decision-making in an environment with variations in temperature. They found that communication between cicada nymphs allows the group to reach a consensus about the local average temperature, which then leads to large-scale swarms. The model is closely related to one used to describe “avalanches” of decisions, such as those among stock market traders that lead to crashes.

Mathematicians have been captivated by the appearance of 17- and 13-year cycles in various species of cicadas, and have previously developed mathematical models that showed how the appearance of such large prime numbers is a consequence of evolutionary pressures to avoid predation. However, the mechanism by which swarms emerge coherently in a given year has not been understood.

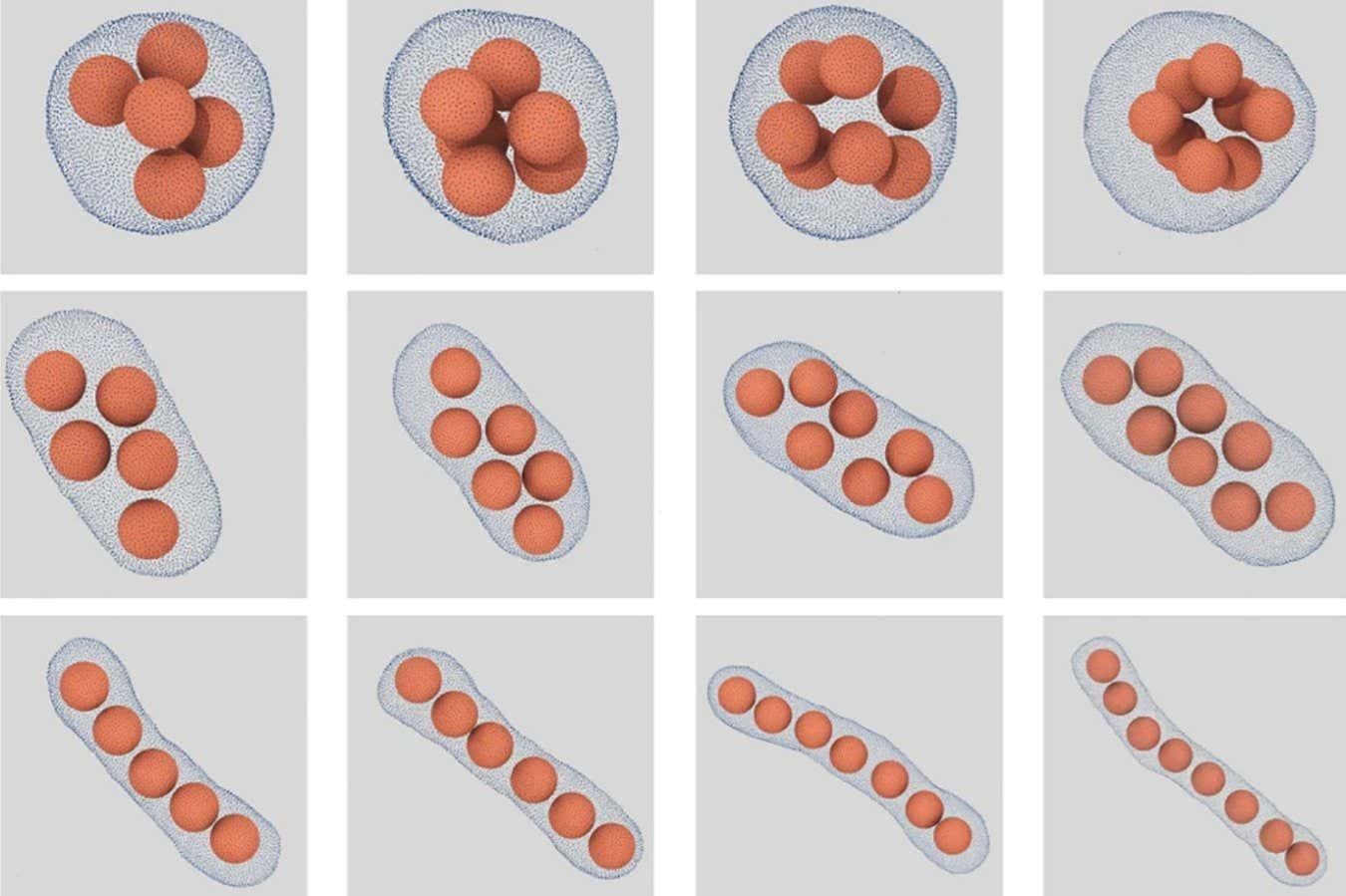

In developing their model, the Cambridge team was inspired by previous research on decision-making that represents each member of a group by a “spin,” like the spins in a magnet. Instead of pointing up or down, however, the two states represent the decision to “remain” or “emerge.”

The local temperature experienced by the cicadas is then like a magnetic field that tends to align the spins and varies slowly from place to place on the scale of hundreds of meters, from sunny hilltops to shaded valleys in a forest. Communication between nearby nymphs is represented by an interaction between the spins that leads to local agreement of neighbours.

The researchers showed that in the presence of such interactions the swarms are large and space-filling, involving every member of the population across a range of local temperature environments. Without communication, by contrast, every nymph is on its own, responding to every subtle variation in microclimate.
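The spin picture described above can be sketched in a few lines of Python, in the spirit of a zero-temperature random-field Ising model, a standard way to model avalanches of collective decisions. This is an illustration under stated assumptions, not the Cambridge team's published model: the square grid, the uncorrelated Gaussian "microclimate" offsets (the real temperature field varies smoothly over hundreds of meters), the coupling strength `J` and the hypothetical function `simulate_emergence` are all choices made here for clarity. "Zero-temperature" refers to the absence of random noise in each nymph's decision rule, not to the soil. A slowly rising uniform drive `H` stands in for seasonal warming; whenever it tips one nymph into emerging, the code follows the chain of neighbours that tip in turn and records the size of that "swarm."

```python
import random


def simulate_emergence(L=30, J=1.0, sigma=1.0, seed=0):
    """Illustrative zero-temperature random-field Ising sketch (hypothetical).

    Each site of an L x L periodic grid holds one nymph in state -1 ("remain")
    or +1 ("emerge"). Assumptions: microclimate enters as a fixed Gaussian
    offset h[i] with standard deviation sigma, and J >= 0 couples nearest
    neighbours. A uniform drive H (seasonal warming) rises slowly; nymph i
    emerges once H + h[i] + J * (sum of neighbour states) > 0 and never goes
    back. Returns the size of each avalanche ("swarm") in order.
    """
    rng = random.Random(seed)
    n = L * L
    h = [rng.gauss(0.0, sigma) for _ in range(n)]
    s = [-1] * n  # everyone starts underground

    def grid_neighbours(i):
        r, c = divmod(i, L)
        return [((r - 1) % L) * L + c, ((r + 1) % L) * L + c,
                r * L + (c - 1) % L, r * L + (c + 1) % L]

    nbrs = [grid_neighbours(i) for i in range(n)]

    def local_field(i):
        # Field felt by nymph i, excluding the uniform drive H.
        return h[i] + J * sum(s[j] for j in nbrs[i])

    sizes = []
    underground = set(range(n))
    while underground:
        # Raise H just enough that the most "eager" remaining nymph tips over.
        first = max(underground, key=local_field)
        H = -local_field(first)
        stack, size = [first], 0
        while stack:
            i = stack.pop()
            if s[i] == 1:
                continue  # already emerged via another path
            s[i] = 1
            underground.discard(i)
            size += 1
            # Each emergence raises its neighbours' fields by 2J; queue any that tip.
            for j in nbrs[i]:
                if s[j] == -1 and H + local_field(j) > 0:
                    stack.append(j)
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    n = 30 * 30
    for J in (0.0, 3.0):
        sizes = simulate_emergence(L=30, J=J)
        print(f"J={J}: {len(sizes)} swarms, largest covers {max(sizes) / n:.0%} of nymphs")
```

With `J=0` every nymph acts alone, so each "swarm" contains exactly one individual and emergence is spread out over the whole warming period. With strong coupling, such as `J=3.0`, the first nymph to emerge typically sets off a single avalanche that sweeps nearly the whole grid, a toy analogue of the large, space-filling swarms the researchers describe.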

The research was carried out by Professor Raymond E Goldstein, the Alan Turing Professor of Complex Physical Systems in the Department of Applied Mathematics and Theoretical Physics (DAMTP); Professor Robert L Jack of DAMTP and the Yusuf Hamied Department of Chemistry; and Dr. Adriana I Pesci, a Senior Research Associate in DAMTP.

“As an applied mathematician, there is nothing more interesting than finding a model capable of explaining the behaviour of living beings, even in the simplest of cases,” said Pesci.

The researchers say that while their model does not require any particular means of communication between underground nymphs, acoustical signaling is a likely candidate, given the ear-splitting sounds that the swarms make once they emerge from underground.

The researchers hope that their conjecture regarding the role of communication will stimulate field research to test the hypothesis.

“If our conjecture that communication between nymphs plays a role in swarm emergence is confirmed, it would provide a striking example of how Darwinian evolution can act for the benefit of the group, not just the individual,” said Goldstein.


Credit for the article goes to Sarah Collins, University of Cambridge.