The market for collectible digital assets, or non-fungible tokens, is an interesting example of a physical system of great complexity, with non-trivial dynamics and its own logic of financial transactions. Researchers at the Institute of Nuclear Physics of the Polish Academy of Sciences (IFJ PAN) in Cracow have now analysed its global statistical features in detail.

In the past, the value of money was determined by the amount of precious metals it contained. Today, we attribute it to certain sequences of digital zeros and ones, simply agreeing that they correspond to coins or banknotes. Non-fungible tokens (NFTs) operate by a similar convention: their owners assign a measurable value to certain sets of ones and zeros, treating them as virtual equivalents of assets such as works of art or properties.

NFTs are closely linked to the cryptocurrency markets but change hands differently from, for example, bitcoins. While every bitcoin is exactly the same and has the same value, each NFT is a unique entity with an individually determined value, integrally linked to information about its current owner.

“Trading in digital assets treated in this way is not guided by the logic of typical currency markets, but by the logic of markets trading in objects of a collector’s nature, such as paintings by famous painters,” explains Prof. Stanislaw Drozdz (IFJ PAN, Cracow University of Technology).

“We have already become familiar with the statistical characteristics of cryptocurrency markets through previous analyses. The question of the characteristics of a new, very young and at the same time fundamentally different market, also built on blockchain technology, therefore arose very naturally.”

The market for NFTs was initiated in 2017 on the blockchain created for the Ethereum cryptocurrency. The idea became popular, and trading grew rapidly, during the pandemic. At that time, a record-breaking transaction took place at an auction organized by the famous English auction house Christie’s, when the art token Everydays: The First 5000 Days, created by Mike Winkelmann, was sold for $69 million.

Tokens are generally grouped into collections of different sizes, and the less frequently certain characteristics of a token occur in a collection, the higher its value tends to be. Statisticians from IFJ PAN examined publicly available data from the CryptoSlam (cryptoslam.io) and Magic Eden (magiceden.io) portals on five popular collections running on the Solana cryptocurrency blockchain.

These were sets of images and animations known as Blocksmith Labs Smyths, Famous Fox Federation, Lifinity Flares, Okay Bears, and Solana Monkey Business, each containing several thousand tokens with an average transaction value of close to a thousand dollars.

“We focused on analysing changes in the financial parameters of a collection such as its capitalization, minimum price, the number of transactions executed on individual tokens per unit of time (hour), the time interval between successive transactions, or the value of transaction volume. The data covered the period from the launch date of a particular collection up to and including August 2023,” says Dr. Marcin Watorek (Cracow University of Technology, PK).
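Two of the quantities mentioned above, the number of transactions per hour and the interval between successive transactions, can be derived from trade timestamps alone. The following Python sketch assumes a plain list of timezone-aware timestamps; the function names and the three sample trades are hypothetical and are not taken from the CryptoSlam or Magic Eden data.

```python
from collections import Counter
from datetime import datetime, timezone


def hourly_counts(timestamps):
    """Count transactions per hour; keys are the start of each hour."""
    counts = Counter()
    for t in timestamps:
        counts[t.replace(minute=0, second=0, microsecond=0)] += 1
    return dict(counts)


def inter_transaction_times(timestamps):
    """Seconds between successive transactions, in chronological order."""
    ordered = sorted(timestamps)
    return [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]


# Hypothetical trades for illustration only (not real market data).
trades = [
    datetime(2023, 8, 1, 10, 5, tzinfo=timezone.utc),
    datetime(2023, 8, 1, 10, 47, tzinfo=timezone.utc),
    datetime(2023, 8, 1, 12, 30, tzinfo=timezone.utc),
]

counts = hourly_counts(trades)
gaps = inter_transaction_times(trades)
```

Hours without any trade do not appear in `counts`; for a time-series analysis they would presumably need to be filled in with zeros.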

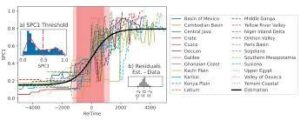

Mature financial markets characteristically exhibit certain power laws, which signal that large events are more likely than a typical Gaussian probability distribution would predict. It appears that such laws are already evident in the fluctuations of NFT market parameters, for example, in the distribution of times between individual trades or in volume fluctuations.
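One common way to quantify a power-law tail is the Hill estimator, which estimates the tail exponent from the largest observations in a sample. The sketch below is only an illustration of that general technique, not necessarily the method used by the researchers; it runs on synthetic Pareto-distributed numbers with a known exponent of 3, and the function name and the choice of `k` are assumptions made for this example.

```python
import math
import random


def hill_estimator(samples, k):
    """Hill estimate of the tail exponent from the k largest positive samples."""
    ordered = sorted(samples, reverse=True)
    threshold = ordered[k]  # the (k + 1)-th largest value
    log_sum = sum(math.log(x / threshold) for x in ordered[:k])
    return k / log_sum


# Synthetic data with a known tail exponent of 3 (not market data).
rng = random.Random(0)
synthetic = [rng.paretovariate(3.0) for _ in range(20000)]

alpha_hat = hill_estimator(synthetic, k=2000)
print(f"estimated tail exponent: {alpha_hat:.2f}")
```

On real data the estimate depends on how many of the largest observations are used, so it is usually inspected over a range of `k` values rather than read off from a single one.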

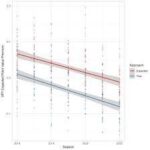

Among the statistical parameters analysed by the IFJ PAN researchers was the Hurst exponent, which describes the reluctance of a system to change its trend. The value of this exponent falls below 0.5 when the system tends to fluctuate: each rise increases the probability of a subsequent fall (and vice versa).

In contrast, values above 0.5 indicate the existence of a certain long-term memory: after a rise, another rise is more probable; after a fall, another fall is more probable. For the token collections studied, the values of the Hurst exponent were between 0.6 and 0.8, a level characteristic of well-established markets. In practice, this property means that the trading prices of tokens from a given collection fluctuate in a similar manner in many cases.
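To make the meaning of the Hurst exponent more concrete, the sketch below estimates it with first-order detrended fluctuation analysis (DFA), one widely used estimator. The article does not state which estimator the researchers applied, so this choice, the function name `dfa_hurst`, and the list of scales are assumptions for illustration. The input is synthetic uncorrelated noise, for which the estimate should come out close to 0.5.

```python
import math
import random


def dfa_hurst(series, scales):
    """Estimate the Hurst exponent with first-order detrended fluctuation analysis."""
    mean = sum(series) / len(series)
    # Profile: cumulative sum of deviations from the mean.
    profile, total = [], 0.0
    for value in series:
        total += value - mean
        profile.append(total)

    log_s, log_f = [], []
    for s in scales:
        x_mean = (s - 1) / 2
        sxx = s * (s * s - 1) / 12  # sum of (i - x_mean)^2 for i = 0..s-1
        variances = []
        # Non-overlapping windows of length s; a leftover tail is ignored.
        for start in range(0, len(profile) - s + 1, s):
            seg = profile[start:start + s]
            y_mean = sum(seg) / s
            slope = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(seg)) / sxx
            resid = [y - (y_mean + slope * (i - x_mean)) for i, y in enumerate(seg)]
            variances.append(sum(r * r for r in resid) / s)
        log_s.append(math.log(s))
        # log F(s), where F(s) is the root-mean-square detrended fluctuation.
        log_f.append(0.5 * math.log(sum(variances) / len(variances)))

    # Least-squares slope of log F(s) against log s.
    ms = sum(log_s) / len(log_s)
    mf = sum(log_f) / len(log_f)
    num = sum((a - ms) * (b - mf) for a, b in zip(log_s, log_f))
    den = sum((a - ms) ** 2 for a in log_s)
    return num / den


# Synthetic uncorrelated noise (not market data).
rng = random.Random(42)
noise = [rng.gauss(0, 1) for _ in range(4096)]

h_noise = dfa_hurst(noise, scales=[16, 32, 64, 128, 256])
print(f"Hurst exponent of white noise: {h_noise:.2f}")
```

A persistent series, such as the ones described above with exponents between 0.6 and 0.8, would yield a steeper slope than this uncorrelated reference.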

The existence of a certain long-term memory of the system, reaching up to two months in the NFT market, may indicate the presence of multifractality. When we start to magnify a fragment of an ordinary fractal, sooner or later we see a structure resembling the initial object, always at the same magnification. In the case of multifractals, by contrast, different fragments have to be magnified at different rates.

It is precisely this non-linear nature of self-similarity that has also been observed in the digital collectors’ market, including in minimum prices, numbers of transactions per unit of time, and intervals between transactions. However, this multifractality was not fully developed and was most clearly revealed where the greatest fluctuations were observed in the system under study.
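The idea of different fragments scaling at different rates can be written down compactly in the language of multifractal detrended fluctuation analysis, a standard framework for such studies. The article does not give the formulas the researchers used, so the notation below is an assumption for illustration: $F^2(\nu, s)$ is the detrended variance in segment $\nu$ of length $s$, $N_s$ is the number of segments, and $q \neq 0$ selects whether large ($q > 0$) or small ($q < 0$) fluctuations dominate the average.

```latex
F_q(s) = \left\{ \frac{1}{N_s} \sum_{\nu=1}^{N_s}
         \left[ F^2(\nu, s) \right]^{q/2} \right\}^{1/q}
         \sim s^{h(q)}
```

For an ordinary (mono)fractal signal the generalized Hurst exponent $h(q)$ is the same for every $q$, and $h(2)$ is the usual Hurst exponent. A signal is multifractal when $h(q)$ varies with $q$, that is, when large and small fluctuations scale differently.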

“Our research also shows that the price of the cryptocurrency for which collections are sold directly affects the volume they generate. This is an important observation, as cryptocurrency markets are already known to show many signs of statistical maturity,” notes Pawel Szydlo, first author of the article in Chaos: An Interdisciplinary Journal of Nonlinear Science.

The analyses carried out at IFJ PAN lead to the conclusion that, despite its young age and slightly different trading mechanisms, the NFT market is beginning to function in a manner that is statistically similar to established financial markets. This seems to indicate a kind of universality among financial markets, even those of a significantly different nature. Understanding it more closely, however, will require further research.


Credit for the article goes to the Polish Academy of Sciences