The first commercial gambling operations emerged, coincidentally or not, at the same time as the study of mathematical probability in the mid-1600s.

By the early 1700s, commercial gambling operations were widespread in European cities such as London and Paris. But in many of the games that were offered, players faced steep odds.

Then, in 1713, the brothers Johann and Jacob Bernoulli proved their “Golden Theorem,” known now as the law of large numbers or long averages.

But gambling entrepreneurs were slow to embrace this theorem, which showed how a smaller house edge could actually serve the house better than a larger one.

The book “The Gambling Century: Commercial Gaming in Britain from Restoration to Regency” explains how it took government efforts to ban and regulate betting for gambling operators to finally understand just how much money could be made from a minuscule house edge.

The illusion of even odds in games that were the ancestors of roulette and blackjack proved immensely profitable, sparking a “probability revolution” that transformed gambling in Britain and beyond.

A new theorem points to sneaky big profits

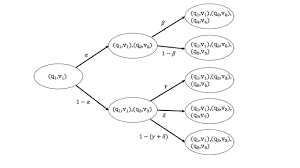

The law of large numbers describes what happens when an event governed by chance is repeated many times.

When you flip a coin, for example, you have a 50% – or “even money” – chance of getting heads or tails. If you flip a coin 10 times, it’s quite possible that heads will turn up seven times and tails three times. But over 100, or 1,000, or 10,000 flips, the proportion of heads will tend to get closer and closer to the mathematical “mean of probability” – that is, half heads and half tails.
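A quick simulation illustrates the point. In this sketch, Python's `random` module stands in for a fair coin. The seed, the flip counts and the `heads_fraction` helper are arbitrary choices made for illustration.

```python
import random


def heads_fraction(num_flips: int, rng: random.Random) -> float:
    """Flip a simulated fair coin num_flips times; return the share of heads."""
    heads = sum(rng.random() < 0.5 for _ in range(num_flips))
    return heads / num_flips


rng = random.Random(1713)  # fixed seed so the run is repeatable
for n in (10, 100, 1_000, 10_000, 100_000):
    frac = heads_fraction(n, rng)
    print(f"{n:>7} flips: {frac:.4f} heads, {abs(frac - 0.5):.4f} away from one half")
```

The gap from one half usually shrinks as the number of flips grows, although any single run can wobble along the way.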

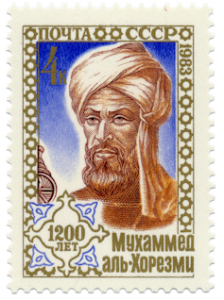

Mathematicians Johann and Jacob Bernoulli developed what’s known today as the law of large numbers. Oxford Science Archive/Print Collector via Getty Images

This principle was popularized by writers such as Abraham De Moivre, who applied it to games of chance.

De Moivre explained how, over time, someone with even the smallest statistical “edge” would eventually win almost all of the money that was staked.
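The classical “gambler's ruin” formula shows what De Moivre's claim means in practice. This sketch is a simplified model, not De Moivre's own treatment. Each round is a 1-unit, even-money bet that the player wins with probability p, and play continues until one side holds all the money. The function name is hypothetical. The 18/38 example uses the chance of winning a bet on red on the roulette wheel described in the next paragraph.

```python
def prob_player_takes_all(p: float, player_units: int, total_units: int) -> float:
    """Classical gambler's ruin: the chance that a player who wins each 1-unit,
    even-money bet with probability p, starting with player_units, ends up
    holding all total_units before going broke."""
    if not (0 < p < 1 and 0 <= player_units <= total_units and total_units > 0):
        raise ValueError("need 0 < p < 1, total_units > 0, 0 <= player_units <= total_units")
    q = 1 - p
    if abs(p - q) < 1e-12:
        # Fair game: the chance is simply the player's share of the money.
        return player_units / total_units
    r = q / p
    if r < 1:
        # Player has the edge; these powers of r shrink toward 0, so no overflow.
        return (1 - r**player_units) / (1 - r**total_units)
    # House has the edge: divide through by r**total_units so the large
    # powers of r are replaced by small ones that cannot overflow.
    return (r**(player_units - total_units) - r**(-total_units)) / (1 - r**(-total_units))


red = 18 / 38  # chance a bet on red wins on a wheel with 0 and 00
for bankroll in (10, 50, 100):
    chance = prob_player_takes_all(red, bankroll, 2 * bankroll)
    print(f"player and house each start with {bankroll} units: "
          f"player takes everything with probability {chance:.2e}")
```

In this model, the player and the house start with equal bankrolls and the house holds an edge of roughly 52.6% to 47.4% on each bet. The player's chance of breaking the house falls from about one in four with 10-unit bankrolls to about 3 in 100,000 with 100-unit bankrolls.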

This is what happens in roulette. The wheel has 36 numbers, 18 of which are red and 18 of which are black. However, there are also two green house numbers – “0” and “00.” If the ball lands on either of them, the house collects every wager placed on red or black. This gives the house a small edge.
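The size of that edge can be worked out directly. This sketch assumes that every pocket is equally likely and that winning bets on red or black pay 1 to 1. It uses Python's `fractions` module so the results come out exact. The function name is hypothetical.

```python
from fractions import Fraction


def even_money_edge(winning_pockets: int, total_pockets: int) -> Fraction:
    """House edge on a 1-unit even-money bet: the bettor's expected loss per unit."""
    p_win = Fraction(winning_pockets, total_pockets)
    expected_return = p_win * 1 + (1 - p_win) * (-1)  # +1 on a win, -1 on a loss
    return -expected_return


double_zero = even_money_edge(18, 38)  # 18 red pockets out of 36 numbers plus 0 and 00
single_zero = even_money_edge(18, 37)  # same bet on a wheel with a single 0
print(f"0/00 wheel: {double_zero} = {float(double_zero):.2%}")  # 1/19 = 5.26%
print(f"single 0:   {single_zero} = {float(single_zero):.2%}")  # 1/37 = 2.70%
```

Losing about 5 cents of every dollar staked sounds modest. That is De Moivre's point: over enough spins, such an edge is all the house needs.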

Imagine 10 players with $100 apiece. Half of them bet $10 on red and the other half bet $10 on black. Assume that the wheel strictly follows the mean of probability. The house will then break even on 18 of every 19 spins, because whatever the players on one color lose pays the players on the other. But on every 19th spin, the ball will land on one of the green “house numbers,” and the house will collect all $100 staked on that spin.

After 100 spins, the house will have won half of the players’ money. After 200 spins, it will have won all of it.

Even with a single house number – the single 0 on the roulette wheels introduced in Monte Carlo by the casino entrepreneur Louis Blanc – the house would win everything after 400 spins.
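The spin counts above follow from simple bookkeeping. This sketch reproduces the text's idealized model. It assumes that the wheel hits green exactly once every 19 spins (or once every 37 on a single-zero wheel) and that red and black results cancel out in between. It ignores how individual bankrolls fluctuate between green spins. The function names and default values are hypothetical illustrations, not a simulation of real play.

```python
def house_take_after(spins: int, total_pockets: int, green_pockets: int,
                     players: int = 10, bankroll: int = 100, stake: int = 10) -> int:
    """Dollars the house holds after `spins` spins in the text's idealized model."""
    spins_per_green = total_pockets // green_pockets  # 19 on a 0/00 wheel, 37 on a 0 wheel
    greens_so_far = spins // spins_per_green          # each green spin sweeps the table
    pool = players * bankroll                          # $1,000 in the example
    return min(greens_so_far * players * stake, pool)  # can't win more than the pool


def spins_until_house_has_everything(total_pockets: int, green_pockets: int,
                                     players: int = 10, bankroll: int = 100,
                                     stake: int = 10) -> int:
    """Spin count at which the house first holds the whole pool."""
    spins_per_green = total_pockets // green_pockets
    per_green = players * stake                            # $100 swept per green spin
    greens_needed = -(-(players * bankroll) // per_green)  # ceiling division
    return greens_needed * spins_per_green


print(house_take_after(100, 38, 2))              # 500: half of the $1,000
print(spins_until_house_has_everything(38, 2))   # 190: within the text's 200
print(spins_until_house_has_everything(37, 1))   # 370: within the text's 400
```

The model's numbers agree with the text. Half the money is gone by spin 100, and all of it is gone by spin 190 on a 0/00 wheel and by spin 370 on a single-zero wheel.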

This eventuality, as De Moivre put it, “will seem almost incredible given the smallness of the odds.”

Hesitating to test the math

As De Moivre anticipated, gamblers and gambling operators were slow to adopt these findings.

De Moivre’s complex mathematical equations were over the heads of gamblers who hadn’t mastered simple arithmetic.

Gambling operators didn’t initially buy into the Golden Theorem, either, seeing it as unproven and therefore risky.

Instead, they played it safe by promoting games with long odds.

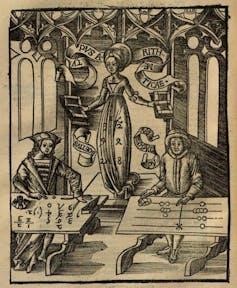

One was the Royal Oak Lottery, a game played with a polyhedral die with 32 faces, like a soccer ball. Players could bet on individual numbers or on combinations of two or four numbers. Even the best of these bets faced odds of 7 to 1 against winning.
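These odds follow from counting faces. This sketch assumes, as the text implies, that all 32 faces were equally likely. The text does not give the payouts the bank actually offered, so the sketch computes only the odds against winning, not the house's edge. The `odds_against` helper is hypothetical.

```python
from fractions import Fraction

FACES = 32  # faces on the Royal Oak die, per the text


def odds_against(numbers_covered: int, faces: int = FACES) -> str:
    """Odds against winning a bet that covers some faces of a fair die."""
    p_win = Fraction(numbers_covered, faces)
    ratio = (1 - p_win) / p_win  # losing faces per winning face
    return f"{ratio}-to-1 against"


for covered in (1, 2, 4):
    print(f"{covered} number(s): {odds_against(covered)}")
# 1 number(s): 31-to-1 against
# 2 number(s): 15-to-1 against
# 4 number(s): 7-to-1 against
```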

Faro was another popular game of chance in which the house, or “bank” as it was then known, gave players the opportunity to defer collecting their winnings for chances at larger payouts at increasingly steep odds.

Faro was a popular game of chance in which players could delay collecting their winnings for the chance to win even bigger sums. Boston Public Library

These games – and others played against a bank – were highly profitable to gambling entrepreneurs, who operated out of taverns, coffeehouses and other similar venues. “Keeping a common gaming house” was illegal, but with the law riddled with loopholes, enforcement was lax and uneven.

Public outcry against the Royal Oak Lottery was such that the Lottery Act of 1699 banned it. A series of laws enacted in the 1730s and 1740s classified faro and other games as illegal lotteries, on the grounds that the odds of winning or losing were not readily apparent to players.

The law of averages put into practice

Early writers on probability had asserted that the “house advantage” did not have to be very large for a gambling operation to profit enormously. The government’s effort to ban games of chance now obliged gaming operators to put the law of long averages into practice.

Further statutes outlawed games of chance played with dice, cards, wheels or any other device featuring “numbers or figures.”

None of these measures deterred gambling operators from the pursuit of profit.

Since the statutes’ language did not explicitly include letters, the game of EO, short for “even odd,” was introduced in the mid-1740s, after the last of these gambling statutes was enacted. It was played on a wheel with 40 slots, all but two of which were marked either “E” or “O.” As in roulette, an ivory ball was rolled along the edge of the wheel as it was spun. If the ball landed in one of the two blank “bar holes,” the house would automatically win, much as it does with the “0” and “00” in roulette.

EO’s defenders could argue that it was not an unlawful lottery because the odds of winning or losing were now readily apparent to players and appeared to be virtually equal. The key, of course, is that the bar holes ensured they weren’t truly equal.
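This sketch works through the EO arithmetic under several assumptions. The text says only that 38 of the 40 slots carried a letter, so the even split of 19 “E” slots and 19 “O” slots is an assumption. The sketch also assumes a 1-to-1 payout on winning bets and that the house takes every bet when the ball drops into a bar hole. The function name is hypothetical.

```python
from fractions import Fraction


def eo_bet_edge(e_slots: int = 19, o_slots: int = 19, bar_holes: int = 2) -> Fraction:
    """House edge on a 1-unit bet on 'E'. Assumes an even E/O split, a 1-to-1
    payout, and that the house wins every bet when the ball hits a bar hole."""
    total = e_slots + o_slots + bar_holes  # 40 slots on the wheel
    p_win = Fraction(e_slots, total)
    return (1 - p_win) - p_win             # expected loss per unit staked


print(eo_bet_edge())  # 1/20
```

Under these assumptions, the bank's edge on an EO bet comes to 1/20, or 5%. That is slightly below the 1/19 edge on red or black in 0/00 roulette, and it is small enough that the game could pass for an even contest.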

Although this logic might not have stood up in court, overburdened law enforcement was happy to have a reason to look the other way. EO proliferated; legislation to outlaw it was proposed in 1782 but failed.

In the 19th century, roulette became a big draw at Monte Carlo’s casinos. Hulton Archive/Getty Images

The allure of ‘even money’

Gambling operators may even have realized that evening the odds drew more players, who in turn staked more.

After EO appeared in Britain, gambling operations both there and on the continent of Europe introduced “even money” betting options into both new and established games.

For example, the game of biribi, which was popular in France throughout the 18th century, involved players betting on numbers from 1 to 72, which were shown on a betting cloth. Numbered beads would then be drawn from a bag to determine the winning number.

In one version from around 1720, players could bet on individual numbers, on vertical columns of six numbers, or on other options that promised large payouts against steeper odds.

By the end of the 18th century, however, one biribi cloth featured even money options: Players could bet that the number drawn would fall between 1 and 35, or that it would fall between 36 and 70. Players could also bet on red or black numbers, which makes the game a likely inspiration for roulette.
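The text does not say how many beads this later cloth drew from. If it still used the 72 numbers of the earlier game, which is an assumption here, then 71 and 72 would be covered by neither range bet and would act like roulette's green pockets. This sketch checks that arithmetic. It assumes that every bead is equally likely to be drawn and that winning range bets pay even money. The function name is hypothetical.

```python
from fractions import Fraction


def range_bet_edge(low: int, high: int, numbers_in_bag: int) -> Fraction:
    """House edge on an even-money bet that the drawn number falls in [low, high],
    assuming each of numbers_in_bag beads is equally likely to be drawn."""
    covered = high - low + 1
    p_win = Fraction(covered, numbers_in_bag)
    return (1 - p_win) - p_win  # expected loss per unit staked


# Assumption: the later cloth still drew from 72 numbers, as the earlier game did.
print(range_bet_edge(1, 35, 72))   # 1/36
print(range_bet_edge(36, 70, 72))  # 1/36
# If only 70 numbers were in play, the two bets would be exactly fair.
print(range_bet_edge(1, 35, 70))   # 0
```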

In Britain, the Victorian ethos of morality and respectability eventually won out. In 1845, Parliament outlawed games of chance played for money in public or in private, and these restrictions were not lifted until 1960.

By 1845, however, British gamblers could travel by steamship and train to one of the many European resorts cropping up across the continent, where the probability revolution had transformed casino gambling into the formidable business enterprise it is today.


Credit for this article goes to The Conversation.