You can describe the shape you live on in multiple dimensions. vkulieva/iStock via Getty Images Plus

When you look at your surrounding environment, it might seem like you’re living on a flat plane. After all, this is why you can navigate a new city using a map: a flat piece of paper that represents all the places around you. This is likely why some people in the past believed the Earth to be flat. But most people now know that is far from the truth.

You live on the surface of a giant sphere, like a beach ball the size of the Earth with a few bumps added. The surface of the sphere and the plane are two possible 2D spaces, meaning you can move in two independent directions: north-south and east-west.

What other possible spaces might you be living on? That is, what other spaces around you are 2D? For example, the surface of a giant doughnut is another 2D space.

Through a field called geometric topology, mathematicians like me study all possible spaces in all dimensions. Whether trying to design secure sensor networks, mine data or use origami to deploy satellites, the underlying language and ideas are likely to be those of topology.

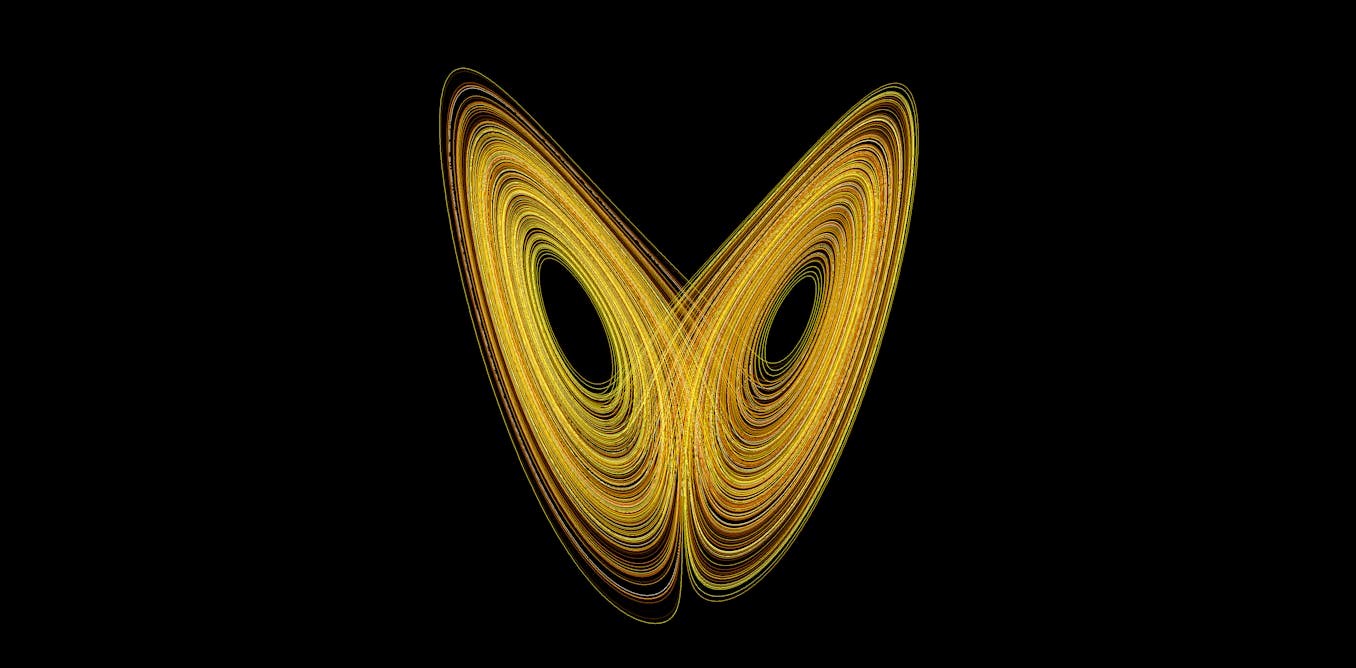

The shape of the universe

When you look around the universe you live in, it looks like a 3D space, just like the surface of the Earth looks like a 2D space. However, just like the Earth, if you were to look at the universe as a whole, it could be a more complicated space, like a giant 3D version of the 2D beach ball surface or something even more exotic than that.

A doughnut, also called a torus, is a shape that you can move across in two directions, just like the surface of the Earth. YassineMrabet via Wikimedia Commons, CC BY-NC-SA

While you don’t need topology to determine that you are living on something like a giant beach ball, knowing all the possible 2D spaces can be useful. Over a century ago, mathematicians figured out all the possible 2D spaces and many of their properties.

In the past several decades, mathematicians have learned a lot about all of the possible 3D spaces. While we do not have a complete understanding like we do for 2D spaces, we do know a lot. With this knowledge, physicists and astronomers can try to determine what 3D space people actually live in.

While the answer is not completely known, there are many intriguing and surprising possibilities. The options become even more complicated if you consider time as a dimension.

To see how this might work, note that to describe the location of something in space – say a comet – you need four numbers: three to describe its position and one to describe the time it is in that position. These four numbers are what make up a 4D space.

Now, you can consider which 4D spaces are possible and in which of those spaces you live.

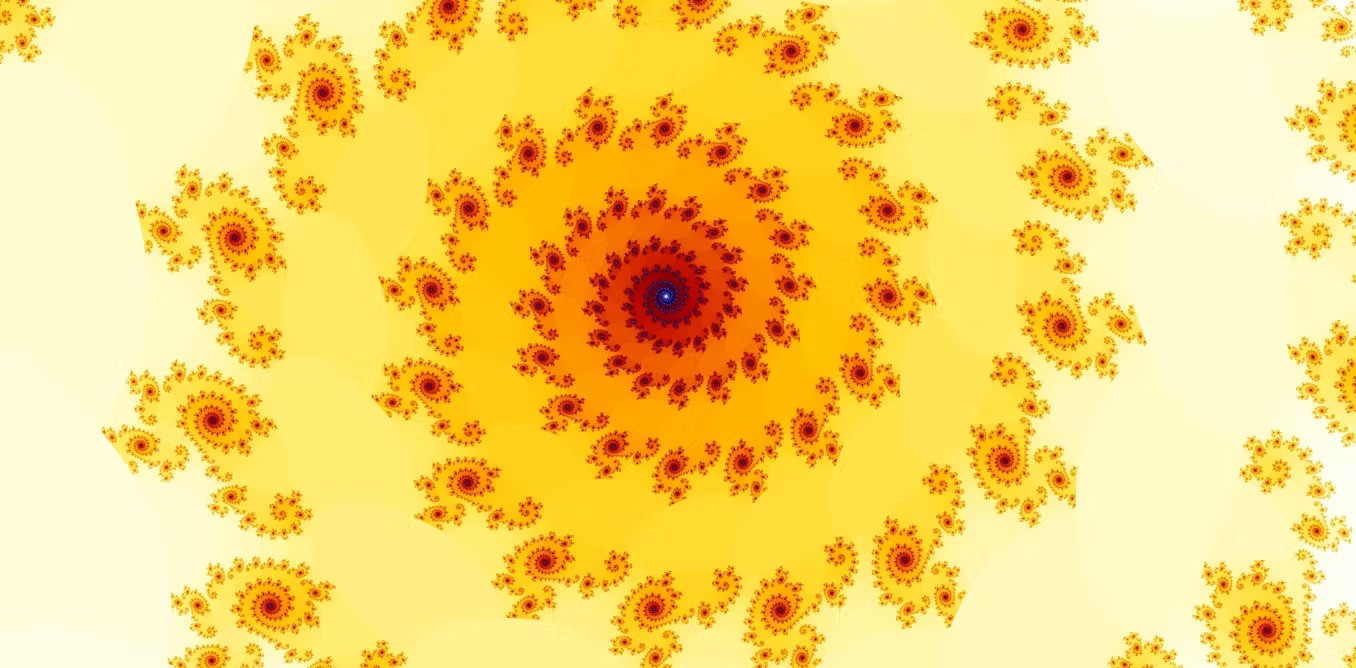

Topology in higher dimensions

At this point, it may seem like there is no reason to consider spaces that have dimensions larger than four, since that is the highest imaginable dimension that might describe our universe. But a branch of physics called string theory suggests that the universe has many more dimensions than four.

There are also practical applications of thinking about higher-dimensional spaces, such as robot motion planning. Suppose you are trying to understand the motion of three robots moving around a warehouse floor. You can put a grid on the floor and describe the position of each robot by its x and y coordinates on the grid. Since each of the three robots requires two coordinates, you will need six numbers to describe all of the possible positions of the robots. You can interpret the possible positions of the robots as a 6D space.
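To make the counting concrete, here is a minimal Python sketch of the idea. The function names and the sample positions are made up for illustration; they do not come from any robotics library. The sketch simply glues the three robots' (x, y) pairs into a single list of six numbers, which is one point in the 6D space.

```python
# Illustrative sketch only: names and sample positions are assumptions.

def to_configuration(positions):
    """Flatten [(x1, y1), (x2, y2), ...] into one point (x1, y1, x2, y2, ...)."""
    return tuple(coord for position in positions for coord in position)


def configuration_dimension(num_robots, coords_per_robot=2):
    """Each robot contributes its own coordinates to the combined space."""
    return num_robots * coords_per_robot


# Three robots on the grid, each described by an (x, y) pair.
positions = [(0, 0), (2, 1), (3, 2)]

config = to_configuration(positions)
print(config)       # (0, 0, 2, 1, 3, 2)
print(len(config))  # 6
```

Calling `configuration_dimension(5)` gives 10, so five robots on the same floor would already trace out a path in a 10D space.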

As the number of robots increases, the dimension of the space increases. Factoring in other useful information, such as the locations of obstacles, makes the space even more complicated. In order to study this problem, you need to study high-dimensional spaces.

There are countless other scientific problems where high-dimensional spaces appear, from modeling the motion of planets and spacecraft to trying to understand the “shape” of large datasets.

Tied up in knots

Another type of problem topologists study is how one space can sit inside another.

For example, if you hold a knotted loop of string, then you have a 1D space (the loop of string) inside a 3D space (your room). Such loops are called mathematical knots.

The study of knots first grew out of physics but has become a central area of topology. Knots are essential to how scientists understand 3D and 4D spaces, and they have a delightful and subtle structure that researchers are still trying to understand.

Knots are examples of spaces that sit inside other spaces. Jkasd/Wikimedia Commons

In addition, knots have many applications, ranging from string theory in physics to DNA recombination in biology to chirality in chemistry.

What shape do you live on?

Geometric topology is a beautiful and complex subject, and there are still countless exciting questions to answer about spaces.

For example, the smooth 4D Poincaré conjecture asks what the “simplest” closed 4D space is, and the slice-ribbon conjecture aims to understand how knots in 3D spaces relate to surfaces in 4D spaces.

Topology is currently useful in science and engineering. Unraveling more mysteries of spaces in all dimensions will be invaluable to understanding the world in which we live and solving real-world problems.


*Credit for article given to John Etnyre*