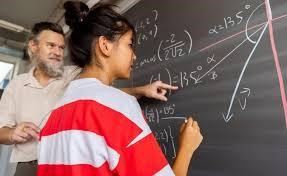

Atomic shapes are so simple that they can’t be broken down any further. Mathematicians are trying to build a “periodic table” of these shapes, and they hope artificial intelligence can help.

Mathematicians attempting to build a “periodic table” of shapes have turned to artificial intelligence for help – but say they don’t understand how it works or whether it can be 100 per cent reliable.

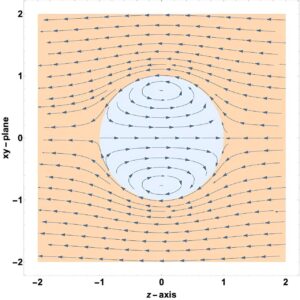

Tom Coates at Imperial College London and his colleagues are working to classify shapes known as Fano varieties, which are so simple that they can’t be broken down into smaller components. Just as chemists arranged elements in the periodic table by their atomic weight and group to reveal new insights, the researchers hope that organising these “atomic” shapes by their various properties will help in understanding them.

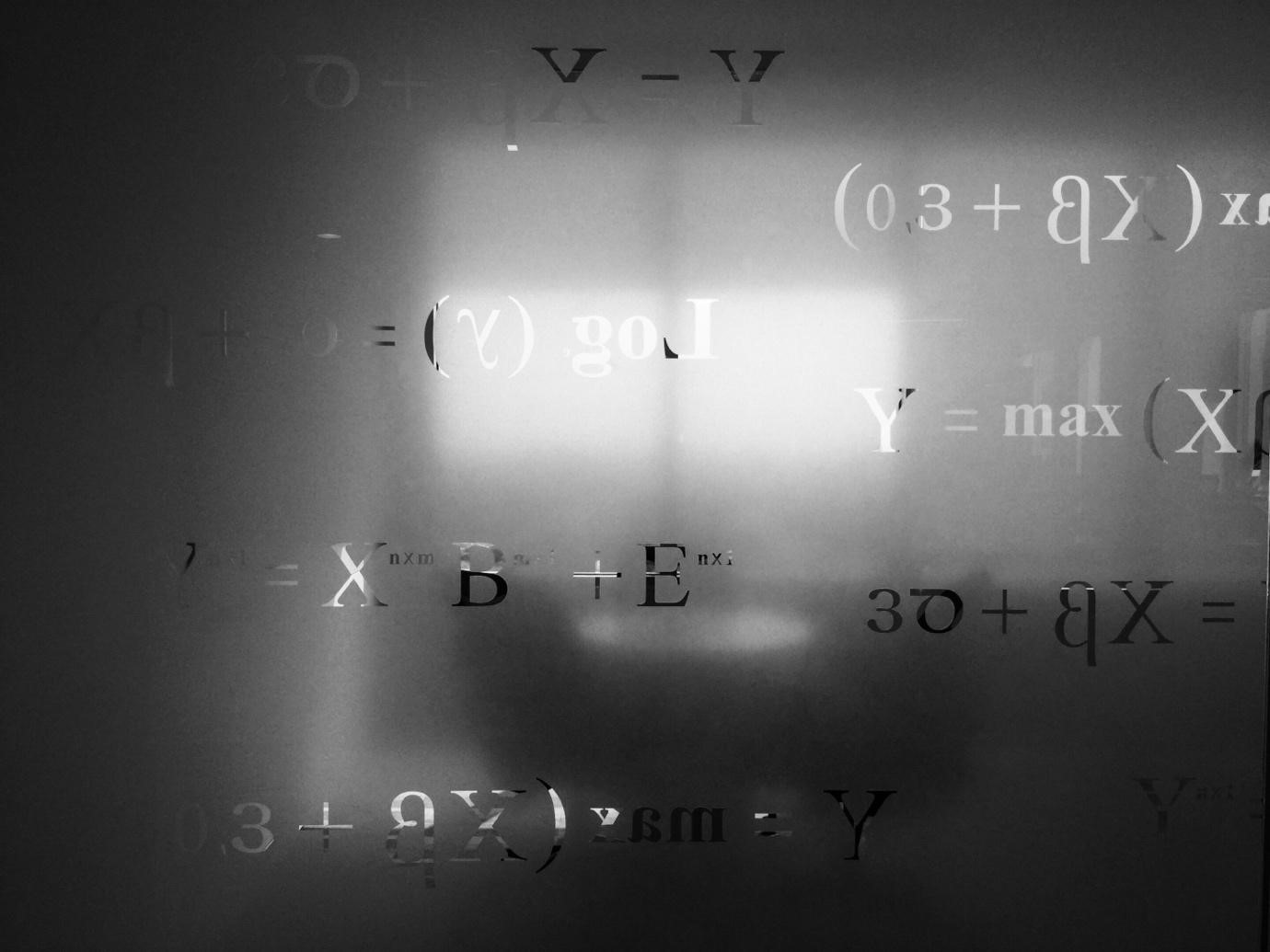

The team has assigned each atomic shape a sequence of numbers derived from features such as the number of holes it has or the extent to which it twists around itself. This acts as a bar code to identify it.

Coates and his colleagues have now created an AI that can predict certain properties of these shapes from their bar code numbers alone, with an accuracy of 98 per cent – suggesting a relationship that some mathematicians intuitively thought might be real, but have found impossible to prove.
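To make the idea of predicting a property from a bar code concrete, here is a minimal toy sketch in Python. It is not the team's model and uses no real Fano variety data. The "bar codes" are synthetic sequences whose growth rate depends on a hidden label, and the classifier is a simple nearest-centroid rule chosen purely for illustration. Every name in it (`make_barcode`, `train`, `predict`, `accuracy`) is hypothetical.

```python
import random

def make_barcode(label, rng, length=8):
    # Synthetic stand-in for a bar code (an assumption, not real data):
    # the growth rate of the sequence depends on the hidden label.
    rate = 1.5 if label == 0 else 2.5
    return [rate * (i + 1) + rng.gauss(0, 0.5) for i in range(length)]

def centroid(rows):
    # Position-by-position mean of a list of equal-length sequences.
    return [sum(col) / len(col) for col in zip(*rows)]

def sq_dist(a, b):
    # Squared Euclidean distance between two sequences.
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train(samples):
    # Group the bar codes by label and store one mean bar code per label.
    by_label = {}
    for code, label in samples:
        by_label.setdefault(label, []).append(code)
    return {label: centroid(rows) for label, rows in by_label.items()}

def predict(model, code):
    # Return the label whose mean bar code is closest to this one.
    return min(model, key=lambda label: sq_dist(model[label], code))

def accuracy(model, samples):
    # Fraction of samples whose predicted label matches the true label.
    hits = sum(predict(model, code) == label for code, label in samples)
    return hits / len(samples)

rng = random.Random(0)  # fixed seed so the run is repeatable
data = [(make_barcode(label, rng), label) for label in [0, 1] * 100]
train_set, test_set = data[:150], data[150:]
model = train(train_set)
print(f"held-out accuracy: {accuracy(model, test_set):.2f}")
```

High accuracy on held-out examples like these shows only that a pattern holds for the cases tested. It does not prove the pattern holds for every case.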

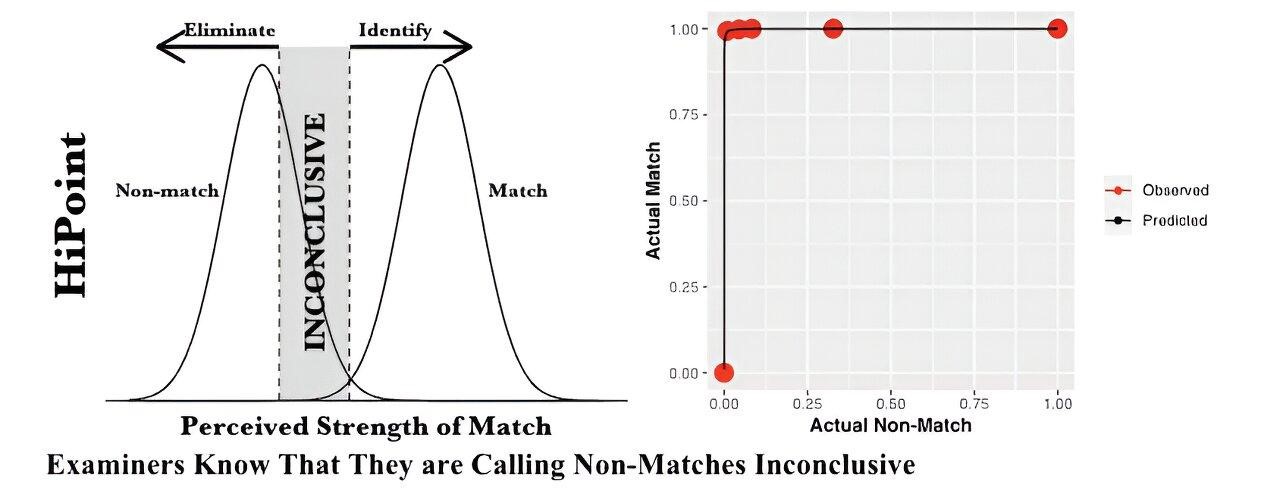

Unfortunately, there is a vast gulf between demonstrating that something is very often true and mathematically proving that it is always so. While the team suspects a one-to-one connection between each shape and its bar code, the mathematics community is “nowhere close” to proving this, says Coates.

“In pure mathematics, we don’t regard anything as true unless we have an actual proof written down on a piece of paper, and no advances in our understanding of machine learning will get around this problem,” says team member Alexander Kasprzyk at the University of Nottingham, UK.

Even without a proven link between the Fano varieties and bar codes, Kasprzyk says that the AI has let the team organise atomic shapes in a way that begins to mimic the periodic table, so that when you read from left to right, or up and down, there seem to be generalisable patterns in the geometry of the shapes.

“We had no idea that would be true, we had no idea how to begin doing it,” says Kasprzyk. “We probably would still not have had any idea about this in 50 years’ time. Frankly, people have been trying to study these things for 40 years and failing to get to a picture like this.”

The team hopes to refine the model until gaps in its periodic table point to the existence of unknown shapes, or until clusters of shapes suggest logical categories. Either outcome could bring better understanding and new ideas that might lead to a method of proof. “It clearly knows more things than we know, but it’s so mysterious right now,” says team member Sara Veneziale at Imperial College London.

Graham Niblo at the University of Southampton, UK, who wasn’t involved in the research, says that the work is akin to forming an accurate picture of a cello or a French horn just from the sound of a G note being played – but he stresses that humans will still need to tease understanding from the results provided by AI and create robust and conclusive proofs of these ideas.

“AI has definitely got uncanny abilities. But in the same way that telescopes didn’t put astronomers out of work, AI doesn’t put mathematicians out of work,” he says. “It just gives us a new tool that allows us to explore parts of the mathematical landscape that were out of reach, or, like a microscope, that were too obscure for us to notice with our current understanding.”

*Credit for article given to Matthew Sparkes*