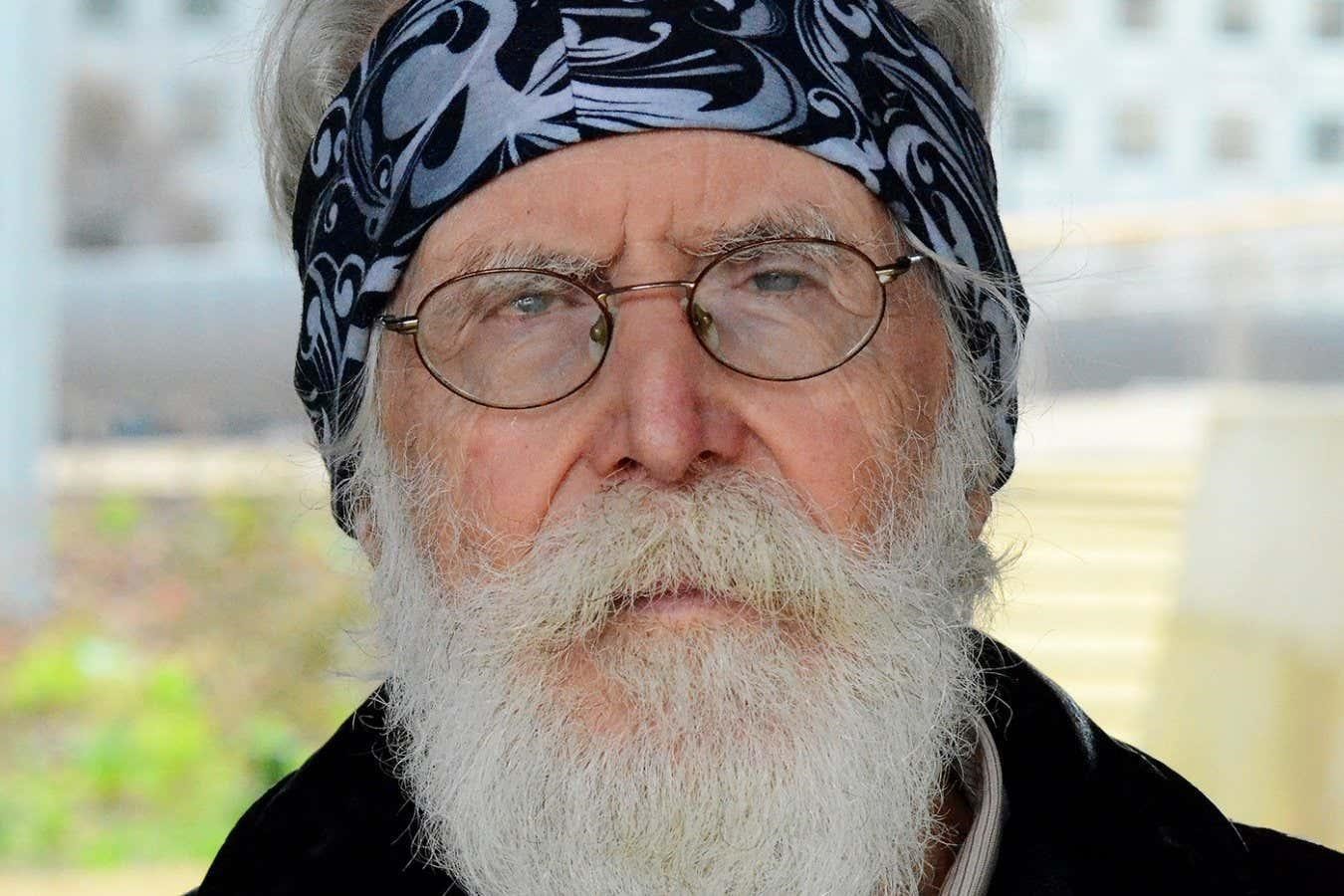

After losing an eye at the age of 5, the 2024 Abel prize winner Michel Talagrand found comfort in mathematics.

French mathematician Michel Talagrand has won the 2024 Abel prize for his work on probability theory and the description of randomness. Shortly after he heard the news, New Scientist spoke with Talagrand to learn more about his mathematical journey.

Alex Wilkins: What does it mean to win the Abel prize?

Michel Talagrand: I think everybody would agree that the Abel prize is really considered like the equivalent of the Nobel prize in mathematics. So it’s something for me totally unexpected, I never, ever dreamed I would receive this prize. And actually, it’s not such an easy thing to do, because there is this list of people who already received it. And on that list, they are true giants of mathematics. And it’s not such a comfortable feeling to sit with them, let me tell you, because it’s clear that their achievements are on an entirely other scale than I am.

What are your attributes as a mathematician?

I’m not able to learn mathematics easily. I have to work. It takes a very long time and I have a terrible memory. I forget things. So I try to work, despite handicaps, and the way I worked was trying to understand really well the simple things. Really, really well, in complete detail. And that turned out to be a successful approach.

Why does maths appeal to you?

Once you are in mathematics, and you start to understand how it works, it’s completely fascinating and it’s very attractive. There are all kinds of levels, you are an explorer. First, you have to understand what people before you did, and that’s pretty challenging, and then you are on your own to explore, and soon you love it. Of course, it is extremely frustrating at the same time. So you have to have the personality that you will accept to be frustrated.

But my solution is when I’m frustrated with something, I put it aside, when it’s obvious that I’m not going to make any more progress, I put it aside and do something else, and I come back to it at a later date, and I have used that strategy with great efficiency. And the reason why it succeeds is the function of the human brain, things mature when you don’t look at them. There are questions which I’ve literally worked on for a period of 30 years, you know, coming back to them. And actually at the end of the 30 years, I still made progress. That’s what is incredible.

How did you get your start?

Now, that’s a very personal story. First, of course, it helped that my father was a maths teacher. But really, the determining factor is I was unlucky to have been born with a deficiency in my retinas. And I lost my right eye when I was 5 years old. I had multiple retinal detachments when I was 15. I stayed in the hospital a long time, I missed school for six months. And that was extremely traumatic, I lived in constant terror that there would be another retinal detachment.

To escape that, I started to study. And my father really immensely helped me, you know, when he knew how hard it was, and when I was in hospital, he came to see me every day and he started talking about some simple mathematics, just to keep my brain functioning. I started studying hard mathematics and physics to really, as I say, to fight the terror and, of course, when you start studying, then you become good at it and once you become good, it’s very appealing.

What is it like to be a professional mathematician?

Nobody tells me what I have to do and I’m completely free to use my time and do what I like. That fitted my personality well, of course, and it’s helped me to devote myself totally to my work.


*Credit for article given to Alex Wilkins*