In Lewis Carroll’s Through the Looking-Glass, the Red Queen tells Alice, “It takes all the running you can do, to keep in the same place.” The race between innovation and obsolescence is like this.

Recent evidence suggests that technological and scientific progress is slowing while epidemiological risks in a globalized world are accelerating. These opposing trends point to the importance of the relative rates of innovation and obsolescence.

When does innovation outpace, or fail to outpace, obsolescence? Our understanding of this dynamic is still nascent, and the discussion of innovation is largely fragmented across fields. Despite some qualitative efforts to bridge this gap, insights are rarely transferred from one field to another.

In research led by Complexity Science Hub (CSH), Eddie Lee and colleagues have taken an important step towards building those bridges with a quantitative mathematical theory that models this dynamic.

The paper, “Idea engines: Unifying innovation & obsolescence from markets & genetic evolution to science,” is published in Proceedings of the National Academy of Sciences.

“You could say this is an exercise in translation,” says Lee, the first author of the paper. “There’s a plethora of theories on innovation and obsolescence in different fields: from economist Joseph Schumpeter’s theory of innovation, to other ideas proposed by theoretical biologist Stuart Kauffman, or philosopher of science Thomas Kuhn. Through our work, we try to open the doors to the scientific process and connect aspects of the different theories into one mathematical model,” explains Lee, a postdoc researcher at CSH.

Space of the possible, and its boundaries

Lee, together with Geoffrey West and Christopher Kempes at the Santa Fe Institute, conceives of innovation as expanding the space of the possible while obsolescence shrinks it. The “space of the possible” encompasses the set of all realized potentialities within a system.

“Within the space of the possible, you might think of different manufacturing technologies available in firms. All the living mutation species would be a good example in biology. In science, you might think of scientific theories that are feasible and empirically supported,” says Lee.

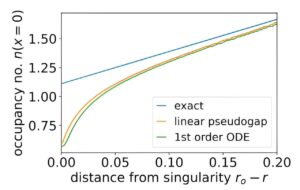

The space of the possible grows as innovations are pulled in from the “adjacent possible,” Stuart Kauffman’s term for the set of all things that lie one step away from what is possible. Lee and his co-authors compare this with an obsolescent front, which is the set of all things that are on the verge of being discarded.

Three possible scenarios

Based on this picture of the space of the possible, the team modeled the general dynamics of innovation and obsolescence and identified three possible scenarios. In the ever-expanding scenario, the possibilities available to agents grow without end. Schumpeterian dystopia is the opposite of this world: innovation fails to outpace obsolescence. A third scenario follows the original Schumpeterian concept of creation and destruction, in which new ways of production survive by eliminating old ones.
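As a rough illustration of how relative rates separate these scenarios, here is a minimal toy sketch in Python. It is not the model from the paper. It assumes, purely for illustration, that the space of the possible can be summarized by a single width that grows by a fixed `innovation_rate` and shrinks by a fixed `obsolescence_rate` at each step. The function names, parameters, and scenario labels are all hypothetical.

```python
def simulate_width(innovation_rate, obsolescence_rate, initial_width=10.0, steps=100):
    """Track the width of a toy 'space of the possible' over time.

    Assumption (not from the paper): the innovation front adds
    `innovation_rate` to the width each step, and the obsolescence
    front removes `obsolescence_rate` from it.
    """
    width = initial_width
    history = [width]
    for _ in range(steps):
        # The width cannot go below zero: the space has collapsed.
        width = max(0.0, width + innovation_rate - obsolescence_rate)
        history.append(width)
        if width == 0.0:
            break
    return history


def classify(innovation_rate, obsolescence_rate, tol=1e-9):
    """Label the toy regime implied by the two rates (illustrative labels)."""
    gap = innovation_rate - obsolescence_rate
    if gap > tol:
        return "ever-expanding"
    if gap < -tol:
        return "collapse"
    return "balanced creative destruction"


if __name__ == "__main__":
    for rates in [(1.0, 0.5), (0.5, 1.0), (1.0, 1.0)]:
        final_width = simulate_width(*rates, steps=20)[-1]
        print(rates, classify(*rates), final_width)
```

In this sketch the outcome depends only on the sign of the difference between the two rates. That captures the intuition behind the three scenarios, but none of the detail of the published theory.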

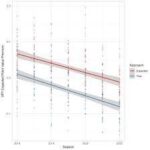

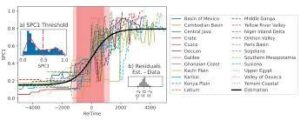

The model was tested with real-world data from a variety of fields, from measures of firm productivity to COVID-19 mutations and scientific citations. The researchers were thus able to bring together examples that had previously been considered in isolation from one another. Both the model and the data describe average dynamics rather than specific innovations, which allows for the generalization emphasized in the paper.

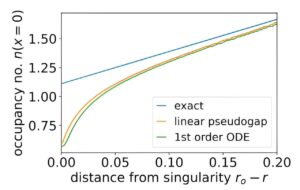

“We saw a remarkable similarity between all the data, from economics, biology, and science of science,” states the CSH researcher. One key discovery is that all the systems seem to live around the innovative frontier. “Moreover, agents at the boundary of innovative explosion, whether close to it or far away, share the same characteristic profile,” adds Lee. In this profile, few agents are innovative and many are near obsolescence. West likens this to systems living on the “edge of chaos,” where a small change in the dynamics can lead to a large change in the state of the system.

Universal phenomenon

The novel approach could transform our understanding of the dynamics of innovation in complex systems. By trying to capture the essence of innovation and obsolescence as a universal phenomenon, the work brings divergent viewpoints together into a unified mathematical theory. “Our framework provides a way of unifying a phenomenon that has so far been studied separately with a quantitative theory,” say the authors.

“Given the critical role that innovation in all its multiple manifestations plays in society, it’s quite surprising that our work appears to be the first attempt to develop a sort of grand unified mathematical theory which is testable to understand its dynamics,” says West. “It’s still very crude but hopefully can provide a point of departure for developing a more detailed realistic theory that can help inform policy and practitioners.”

“We provide an average model of the combined dynamics of innovation and obsolescence,” says Kempes. “In the future it is exciting and important to think about how this average model meets up with detailed theories of how innovations actually occur. For example, how do current objects or technologies get combined to form new things in something like the recently proposed Assembly Theory?”


Credit for this article goes to Complexity Science Hub.