Some scientists insist that the cause of all things exists at the most fundamental level, even in systems as complex as brains and people. What if it isn’t so?

There are seconds left on the clock, and the score is 0-0. Suddenly, a midfielder seizes possession and makes a perfect defence-splitting pass, before the striker slots the ball into the bottom corner to win the game. The moment will be scrutinised ad nauseam in the post-match analysis. But can anyone really say why the winners won?

One thing is for sure: precious few would attribute the victory to quantum mechanics. But isn’t that, in the end, all there is? A physicist might claim that to explain what happens to a football when it is kicked, the interactions of quantum particles are all you need. But they would admit that, as with many things we seek to understand, there is too much going on in a particle-level description to extract real understanding.

Identifying what causes what in complex systems is the aim of much of science. Although we have made amazing progress by breaking things down into ever smaller components, this “reductionist” approach has limits. From the role of genetics in disease to how brains produce consciousness, we often struggle to explain large-scale phenomena from microscale behaviour.

Now, some researchers are suggesting we should zoom out and look at the bigger picture. Having created a new way to measure causation, they claim that in many cases the causes of things are found at the more coarse-grained levels of a system. If they are right, this new approach could reveal fresh insights about biological systems and new ways to intervene – to prevent disease, say. It could even shed light on the contentious question of whether free will exists.

The problem with the reductionist approach is apparent in many fields of science, but let’s take applied genetics. Time and again, gene variants associated with a particular disease or trait are hunted down, only for researchers to find that knocking the gene out of action makes no apparent difference. The common explanation is that the causal pathway from gene to trait is tangled, meandering through a whole web of gene interactions.

The alternative explanation is that the real cause of the disease emerges only at a higher level. This idea is called causal emergence. It defies the intuition behind reductionism, and the assumption that a cause can’t simply appear at one scale unless it is inherent in microcauses at finer scales.

Higher level

The reductionist approach of unpicking complex problems into their constituent parts has often been fantastically useful. We can understand a lot in biology from what enzymes and genes do, and the properties of materials can often be rationalised from how their constituent atoms and molecules behave. Such successes have left some researchers suspicious of causal emergence.

“Most people agree that there is causation at the macro level,” says neuroscientist Larissa Albantakis at the University of Wisconsin-Madison. “But they also insist that all the macroscale causation is fully reducible to the microscale causation.”

Neuroscientists Erik Hoel at Tufts University in Massachusetts and Renzo Comolatti at the University of Milan in Italy are seeking to work out whether causal emergence really exists and, if so, how we can identify it and use it. “We want to take causation from being a philosophical question to being an applied scientific one,” says Hoel.

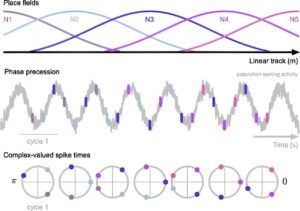

The issue is particularly pertinent to neuroscientists. “The first thing you want to know is, what scales should I probe to get relevant information to understand behaviour?” says Hoel. “There’s not really a good scientific way of answering that.”

Mental phenomena are evidently produced by complex networks of neurons, but for some brain researchers, the answer is still to start at small scales: to try to understand brain function on the basis of how the neurons interact. The European Union-funded Human Brain Project set out to map every one of the brain’s 86 billion neurons, in order to simulate a brain on a computer. But will that be helpful?

Some think not: all the details will just obscure the big picture, they say. After all, you wouldn’t learn much about how an internal combustion engine works by making an atomic-scale computer simulation of one. But if you stick with a coarse-grained description, with pistons and crankshafts and so on, is that just a convenient way of parcelling up all the atomic-scale information into a package that is easier to understand?

The default assumption is that all the causal action still happens at the microscopic level, says Hoel, but we simply “lack the computing power to model all the microphysical details, and that’s why we fixate on particular scales”. “Causal emergence,” he says, “is an alternative to this null hypothesis.” It holds that, for some complex systems, a coarse-grained picture isn’t merely a form of data compression that dispenses with some detail. Instead, there can be more causal clout at these higher levels than there is below. Hoel reckons he can prove it.

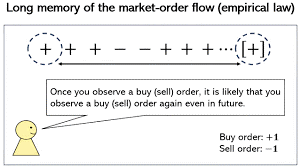

To do so, he first had to establish a method for identifying the cause of an effect. It isn’t enough to find a correlation between one state of affairs and another: correlation isn’t causation, as the saying goes. Just because the number of people eating ice creams correlates with the number who get sunburn, it doesn’t mean that one causes the other: both simply rise when the sun is out. Various measures of causation have been proposed to get to the root of such correlations and judge whether they reflect genuine cause and effect.

In 2013, Hoel, working with Albantakis and fellow neuroscientist Giulio Tononi, also at the University of Wisconsin-Madison, introduced a new way to do this, using a measure called “effective information”. This is based on how tightly a scenario constrains the past causes that could have produced it (the cause coefficient) and the constraints on possible future effects (the effect coefficient). For example, how many other configurations of football players would have allowed that midfielder to release the striker into space, and how many other outcomes could have come from the position of the players as it was just before the goal was scored? If the system is really noisy and random, both coefficients are zero; if it works like deterministic clockwork, they are both 1.

Effective information thus serves as a proxy measure of causal power. By measuring and comparing it at different scales in simple model systems, including a neural-like system, Hoel and his colleagues demonstrated that there could be more causation coming from the macro than from the micro levels: in other words, causal emergence.
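The comparison of scales can be illustrated with a minimal Python sketch. It assumes the standard textbook form of effective information: set the system to each of its states with equal probability (a maximum-entropy intervention), then measure, in bits, how much the resulting next state tells you about the state you set. That works out as the entropy of the average effect distribution minus the average entropy of each state’s effects, so both noise and many states leading to the same outcome lower it. The eight-state system, its grouping into two macro states and the helper names `effective_information` and `coarse_grain` are hypothetical illustrations of the kind of toy model used in this literature, not the researchers’ own code or models.

```python
import numpy as np


def effective_information(tpm: np.ndarray) -> float:
    """Effective information (bits) of a transition probability matrix.

    Row i of `tpm` is the distribution over next states when the system is
    set to state i. Interventions are assumed uniform over all states, so
    EI = H(average row) - mean H(row).
    """
    def entropy(p: np.ndarray) -> float:
        p = p[p > 0]  # treat 0 * log(0) as 0
        return float(-(p * np.log2(p)).sum())

    avg_effect = tpm.mean(axis=0)  # effect distribution under uniform interventions
    mean_row_entropy = np.mean([entropy(row) for row in tpm])
    return entropy(avg_effect) - mean_row_entropy


def coarse_grain(tpm: np.ndarray, groups: list[list[int]]) -> np.ndarray:
    """Macro TPM: sum columns within each target group, average over source rows."""
    macro = np.zeros((len(groups), len(groups)))
    for a, src in enumerate(groups):
        for b, dst in enumerate(groups):
            macro[a, b] = tpm[np.ix_(src, dst)].sum(axis=1).mean()
    return macro


# Hypothetical micro system: states 0-6 jump uniformly at random among 0-6,
# while state 7 always stays where it is.
micro = np.zeros((8, 8))
micro[:7, :7] = 1 / 7
micro[7, 7] = 1.0

# Hypothetical macro description: noisy states {0..6} become "A", state 7 becomes "B".
macro = coarse_grain(micro, [list(range(7)), [7]])

ei_micro = effective_information(micro)
ei_macro = effective_information(macro)
print(f"EI micro = {ei_micro:.3f} bits")  # about 0.544
print(f"EI macro = {ei_macro:.3f} bits")  # about 1.000
print(f"causal emergence = {ei_macro - ei_micro:.3f} bits")
```

Under these assumptions the micro description scores about 0.54 bits and the macro description a full bit: grouping the seven noisy states throws away detail yet yields a more reliable, more informative causal map, which is what causal emergence means in this framework.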

Quantifying causation

This result might have been a quirk of the models they used, or of their definition of effective information as a measure of causation. But Hoel and Comolatti have now investigated more than a dozen different measures of causation, proposed by researchers in fields including philosophy, statistics, genetics and psychology to understand the roots of complex behaviour. In all cases, they saw some form of causal emergence. It would be an almighty coincidence, Hoel says, if all these different schemes just happened to show such behaviour.

The analysis helped the duo to establish what counts as a cause. We might be more inclined to regard something as a genuine cause if its presence is sufficient to bring about the result in question. Does eating ice cream on its own make sunburn likely, for example? Obviously not. We can also assess causation on the basis of necessity: does increased sunburn happen only if more ice creams are consumed, and not otherwise? Again, evidently not: if the ice-cream seller takes a day off on a sunny day, sunburn can still happen. Causation can thus be quantified in terms of the probability that a state of affairs always and only leads to an effect.
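Sufficiency and necessity can be written as simple probabilities, as in the minimal Python sketch below. It assumes one common convention: sufficiency is the probability of the effect given the candidate cause, P(e | c), and necessity is one minus the probability of the effect without it, 1 - P(e | not c). Adding them and subtracting 1 gives the probability-raising measure P(e | c) - P(e | not c), which reaches 1 only when the cause always and only leads to the effect. The published definitions, which average over alternative causes, may differ in detail; the class name `CandidateCause` and all the numbers are hypothetical, and the probabilities are meant as if measured by intervening (making people eat ice cream, or stopping them) rather than read off a correlation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateCause:
    p_effect_if_present: float  # P(e | c), ideally measured by imposing c
    p_effect_if_absent: float   # P(e | not c), ideally measured by removing c

    @property
    def sufficiency(self) -> float:
        # How reliably the cause brings about the effect on its own
        return self.p_effect_if_present

    @property
    def necessity(self) -> float:
        # How rarely the effect happens without the cause
        return 1.0 - self.p_effect_if_absent

    @property
    def strength(self) -> float:
        # Equals sufficiency + necessity - 1, i.e. P(e | c) - P(e | not c);
        # it is 1.0 only if c always and only leads to e
        return self.p_effect_if_present - self.p_effect_if_absent


# Hypothetical numbers: sunburn depends on the sun, so forcing or forbidding
# ice cream leaves the chance of sunburn at 20% either way.
ice_cream = CandidateCause(p_effect_if_present=0.2, p_effect_if_absent=0.2)

# Hypothetical partial cause: strong midday sun makes sunburn far more likely.
midday_sun = CandidateCause(p_effect_if_present=0.6, p_effect_if_absent=0.05)

# An idealised cause that always and only produces the effect.
perfect = CandidateCause(p_effect_if_present=1.0, p_effect_if_absent=0.0)

for name, cause in [("ice cream", ice_cream), ("midday sun", midday_sun), ("perfect", perfect)]:
    print(f"{name}: sufficiency={cause.sufficiency:.2f}, "
          f"necessity={cause.necessity:.2f}, strength={cause.strength:.2f}")
```

With these made-up figures ice cream scores zero strength, because intervening on it leaves the chance of sunburn unchanged, while only the idealised cause scores 1 on both sufficiency and necessity.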

Their work has its critics. Judea Pearl, a computer scientist at the University of California, Los Angeles, says that attempts to “measure causation in the language of probabilities” are outdated. His own models simply state causal structures between adjacent components and then use these to enumerate causal influences between more distantly connected components. But Hoel says that in his latest work with Comolatti, the measures of causation they consider include such “structural causal models” too.

Their conclusion that causal emergence really exists also finds support in recent work by physicists Marius Krumm and Markus Müller at the University of Vienna in Austria. They have argued that the behaviour of some complex systems can’t be predicted by anything other than a complete replay of what all of the components do at all levels; the microscale has no special status as the fundamental origin of what happens at the larger scales. The larger scales, they say, might then constitute the real “computational source” – what you might regard as the cause – of the overall behaviour.

In the case of neuroscience, Müller says, thoughts and memories and feelings are just as much “real” causal entities as are neurons and synapses – and perhaps more important ones, because they integrate more of what goes into producing actual behaviour. “It’s not the microphysics that should be considered the cause for an action, but its high-level structure,” says Müller. “In this sense we agree with the idea of causal emergence.”

Causal emergence also seems to feature in the molecular workings of cells and whole organisms, and Hoel and Comolatti have an idea why. Think about a pair of heart muscle cells. They may differ in some details of which genes are active and which proteins they are producing more of at any instant, yet both remain secure in their identity as heart muscle cells – and it would be a problem if they didn’t. This insensitivity to the fine details makes large-scale outcomes less fragile, says Hoel. They aren’t contingent on the random “noise” that is ubiquitous in these complex systems, where, for example, protein concentrations may fluctuate wildly.

As organisms got more complex, Darwinian natural selection would therefore have favoured more causal emergence – and this is exactly what Hoel and his Tufts colleague Michael Levin have found by analysing the protein interaction networks across the tree of life. Hoel and Comolatti think that by exploiting causal emergence, biological systems gain resilience not only against noise, but also against attacks. “If a biologist could figure out what to do with a [genetic or protein] wiring diagram, so could a virus,” says Hoel. Causal emergence makes the causes of behaviour cryptic, hiding them from pathogens that can only latch onto molecules.

Whatever the reasons behind it, recognising causal emergence in some biological systems could offer researchers more sophisticated ways to predict and control those systems. And that could in turn lead to new and more effective medical interventions. For example, while genetic screening studies have identified many associations between variations of different genes and specific diseases, such correlations have rarely translated into cures, suggesting that they may not be signposts to real causal factors. Instead of assuming that specific genes need to be targeted, a treatment might need to intervene at a higher level of organisation. As a case in point, one new strategy for tackling cancer doesn’t worry about which genetic mutation might have made a cell turn cancerous, but instead aims to reprogramme it at the level of the whole cell into a non-malignant state.

You decide?

Suppressing the influence of noise in biological systems may not be the only benefit causal emergence confers, says Kevin Mitchell, a neuroscientist at Trinity College Dublin, Ireland. “It’s also about creating new types of information,” he says. Attributing causation is, Mitchell says, also a matter of deciding which differences in outcome are meaningful and which aren’t. For example, asking what made you decide to read New Scientist is a different causal question to asking what made you decide to read a magazine.

Which brings us to free will. Are we really free to make decisions like that anyway, or are they preordained? One common argument against the existence of free will is that atoms interact according to rigid physical laws, so the overall behaviour they give rise to can be nothing but the deterministic outcome of all their interactions. Yes, quantum mechanics creates some randomness in those interactions, but behaviour that is merely random can’t be freely willed either. With causal emergence, however, the true causes of behaviour stem from higher levels of organisation, such as how neurons are wired, our brain states, past history and so on. That means we can meaningfully say that we – our brains, our minds – are the real cause of our behaviour.

That is certainly how neuroscientist Anil Seth at the University of Sussex, UK, sees things. “What one calls ‘real’ is of course always going to be contentious, but there is no objection in my mind to treating emergent levels of description as being real,” he says. We do this informally anyway: we speak of our thoughts, desires and goals. “The trick is to come up with sensible ways to identify and measure emergence,” says Seth. Like Hoel and Comolatti, he is pursuing ways of doing that.

Hoel says that the work demonstrating the existence of causal emergence “completely obviates” the idea that “all the causal responsibility drains down to the lower scale”. It shows that “physics is not the only science: there are real entities that do causal work at higher levels”, he says – including you.

Case closed? Not quite. While Mitchell agrees that causal emergence allows us to escape being ruled by the laws of quantum mechanics, he adds that what most people mean by free will requires an additional element: the capacity for conscious reflection and deliberate choice. It may be that we experience this sense of free will in proportion to the degree to which our higher-level brain states are genuine emergent causes of behaviour. Our perception of executing voluntary actions, says Seth, “may in turn relate to volition involving a certain amount of downward causality”.

In other words, you really are more than the sum of your atoms. If you think you made a choice to read this article, you probably did.

*Credit for article given to Philip Ball*